I am concerned that Gamefication obfuscates the real issue in learning:

Transfer.

Is there evidence that game-based learning leads to far transfer?

Without learning transfer, it doesn’t matter how a person learned–whether from a piece of software, watching an expert, rote memorization, or the back of a cereal box. What is important is that learning occurred, and how we know that learning occurred.

This leads to issues of assessment and evaluation.

Transfer and Games: How do we assess this?

The typical response from gameficators is that assessment does not measure the complex learning from games. I have been there and said that. But I have learned that this response is overly dismissive of assessment and utterly simplistic. As I investigated assessment and psychometrics, I learned how simplistic my statements were. Games themselves are assessment tools, and I learned that by learning about assessment.

This Gameficator–(yes I am now going to 3rd person)–had to seek knowledge beyond his ludic and narratological powers. He had to learn the mysteries and great lore of psychometrics, instructional design, and educational psychology. It was through this journey into the dark arts that he was able to overcome some of the traps of Captain Obvious, and his insidious powers to sidetrack and obfuscate through jargonification, and worse–like taking credit for previously documented phenomena by imposing a new name . . . kind of like my renaming Canada to the now improved, muchmorebetter name: Candyland.

In reality, I had to learn the language and content domain of learning to be an effective instructional designer, just as children learn the language and content domains of science, literature, math, and civics.

What I have learned through my journey is that if a learner cannot explain a concept, this inability to explain and demonstrate may be an indicator that the learning from the game experience is not crystallized–that the learner does not have a conceptual understanding that can be explained or expressed by that learner.

Do games deliver this?

Does gamefied learning deliver this?

Gameficators need to address this if this is to be more than a captivating trend.

I am fearful that the good that comes from this trend will not matter if we are only creating jargonification (even if it is more accessible). It is not enough to redescribe an established instructional design technique if we cannot demonstrate its effectiveness. We should be asking how learning in game-like contexts enhances learning and how to measure that.

Measurement and testing are important because well-designed tests and measures can deliver an assessment of crystallized knowledge–does the learner have a chunked conceptual understanding of a concept, such as “resistance”, “average”, “setting”?

Sure, students may have a gist understanding of a concept. The teacher may even see this qualitatively, but the student cannot express it in a test. So is that comprehension? I think not. The point of the test is an un-scaffolded demonstration of comprehension.

Maybe the game player has demonstrated the concept of “resistance” in a game by choosing a boat with less resistance to go faster in the game space–but this is not evidence that they understand the term conceptually. This example from a game may be an important aspect of perceptual learning, which may lead to the eventual ability to explain (conceptual learning); but it is not evidence of comprehension of “resistance” as a concept.

So when we look at game based learning, we should be asking what it does best, not whether it is better. Games can become a type of assessment where contextual knowledge is demonstrated. But we need to go beyond perceptual knowledge–reacting correctly in context and across contexts–to conceptual knowledge: the ability to explain and demonstrate a concept.

My feeling is that one should be skeptical of Gameficators who pontificate without pointing to the traditions in educational research. If Gamefication advocates want to influence education and assessment, they should attempt to learn the history, and provide evidential differences from the established terms they seek to replace.

A darker shadow is cast

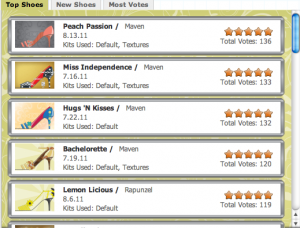

Another concern I have is that perhaps not all gameficators are created equal. Maybe some gameficators are not really advocating for the game elements that are truly fundamental aspects of games? Maybe there are people with no ludic-narratological powers donning the cape of gamefication! I must ask, is this leaderboard what makes for “gameness”? Or does a completion task-bar make for a gamey experience?

Does this leaderboard for shoe preference tell us anything but shoe preference? Where is the game?

Are there some hidden game mechanics that I am failing to apprehend?

Is this just villainy?

Here are a couple of ideas that echo my own concerns:

It seems to me that:

- some of the elements of gamefication rely heavily on aspects of games that are not new, just rebranded.

- some of the elements of gamefication aren’t really “gamey”.

What I like about games is that games can provide multimodal experiences, and provide context and prior knowledge without the interference of years of practice–this is new. Games accelerate learning by reducing some of the gatekeeping issues. Now a person without the strength, dexterity and madness can share in some of the experience of riding a skateboard off a ramp. The benefit of games may be in the increased richness of a virtual interactive experience, and in providing immediate task competencies without the time required to become competent. Games provide a protocol, and they expedite and scaffold players toward a fidelity of conceptual experience.

For example, in RIDE, the player is immediately able to do tricks that require many years of practice because the game exaggerates the fidelity of experience. This cuts the time it might take to develop conceptual knowledge by reducing the necessity of coordination, balance, dexterity and insanity to ride a skateboard up a ramp, do a trick in the air, and then land unscathed.

Gameficators might embrace this notion.

Games can enhance learning in a classroom, and enhance what is already called experiential learning.

My point:

words, terms, concepts, and domain praxis matter.

Additionally, we seem to be missing the BIG IDEA:

- that the way we structure problems is likely predictive of a successful solution.

Herb Simon expressed the idea in his book The Sciences of the Artificial this way:

“solving a problem simply means representing it so as to make the solution transparent.” (1981: p. 153)

Games can help structure problems.

But do they help learners understand how to structure a problem? Do they deliver conceptualized knowledge? Common vocabulary indicative of a content domain?

The issue is really about What Games are Good at Doing, not whether they are better than some other traditional form of instruction.

Do they help us learn how to learn? Do they lead to crystallized conceptual knowledge that is found in the use of common vocabulary? In physics class, when we mention “resistance”, students should offer more than

“Resistance is futile”

or, a correct but non-applicable answer such as “an unwillingness to comply”.

The key is that the student can express the expected knowledge of the content domain in relation to the word. This is often what we test for. So how do games lead to this outcome?

I am not here to give gameficators a hard time–I have a gamefication badge (self-created)–but I would like to know that when my learning is gamefied, the gameficator had some knowledge from the last century of instructional design and learning research, just as I want my physics student to know that resistance is an operationalized concept in science, not a popular cultural saying from the BORG.

The concerns expressed here are applicable in most learning contexts. But if we are going to advocate the use of games, then perhaps we should look at how good games are effective, as well as how good lessons are structured. Perhaps more importantly,

- we may need to examine our prevalent misunderstandings about learning assessment;

- perhaps explore the big ideas and lessons that come from years of educational psychology research, rather than just renaming and creating new platforms without realizing or acknowledging that current ideas in gamefication stand on the established shoulders of giants from a century of educational research.

Jargonification is a big concern. So let’s make our words matter and also look back as we look forward. Games are potentially powerful tools for learning, but not all may be effective for every purpose or context. What does the research say?

I am hopeful gamefication delivers a closer look at how game-like instructional design may enhance successful learning.

I don’t think anyone would disagree — fostering creativity should be a goal of classroom learning.

However, the terms creativity and innovation are often misused. When used, they typically imply that REAL learning cannot be measured. Fortunately, we now know A LOT about learning and how it happens. It is measurable and we can design learning environments that promote it. It is the same with creativity as with intelligence–we can promote growth in creativity and intelligence through creative approaches to pedagogy and assessment. Data-driven instruction does not kill creativity; it should promote it.

One of the ways we might look at creativity and innovation is through the much maligned tradition of intelligence testing, as described on Wikipedia:

Fluid intelligence or fluid reasoning is the capacity to think logically and solve problems in novel situations, independent of acquired knowledge. It is the ability to analyze novel problems, identify patterns and relationships that underpin these problems and the extrapolation of these using logic. It is necessary for all logical problem solving, especially scientific, mathematical and technical problem solving. Fluid reasoning includes inductive reasoning and deductive reasoning, and is predictive of creativity and innovation.

Crystallized intelligence is indicated by a person’s depth and breadth of general knowledge, vocabulary, and the ability to reason using words and numbers. It is the product of educational and cultural experience in interaction with fluid intelligence and also predicts creativity and innovation.

The Myth of Opposites

Creativity and intelligence are not opposites. It takes both for innovation.

What we often lack are creative ways of measuring learning growth in assessments. When we choose to measure growth through summative evaluations and worksheets over and over, we nurture boredom and kill creativity.

To foster creativity, we need to adopt and implement pedagogy and curriculum that promote creative problem solving, and also provide criteria that can measure creative problem solving.

What is needed are ways to help students learn content in creative ways through the use of creative assessments.

We often confuse the idea of learning creatively with trial and error and play, free of any kind of assessment–as if the Mona Lisa was somehow created through just free play and doodling, and assessment somehow kills creativity. Assessment provides learning goals.

Without learning criteria, students are left to make sense of the problem put before them with questions like “what do I do now?” (ad infinitum).

The role of the educator is to design problems so that the solution becomes transparent. This is done by providing information about process, outcome, and quality criteria; assessment is how the work is to be judged. For example, “for your next assignment, I want a boat that is beautiful and that is really fast. Here are some examples of boats that are really fast. Look at the hull, the materials they are made with, etc. and design me a boat that goes very fast and tell me why it goes fast. Tell me why it is beautiful.” Now use the terms from the criteria. What is beautiful? Are you going to define it? How about fast? Fast compared to what? These open-ended, interest-driven, free play assignments might be motivating, but they lead to quick frustration and lots of “what do I do now?”

But play and self-interest are not the problem here. The problem is the way we are approaching assessment.

Although play is described as a range of voluntary, intrinsically motivated activities normally associated with recreational pleasure and enjoyment, pleasure and enjoyment still come from judgements about one’s work–just like assessment–whether finger painting or creating a differential equation. The key feature here is that play seems to involve self-evaluation and discovery of key concepts and patterns. Assessments can be constructed to scaffold and extend this, and this same process can be structured in classrooms through assessment criteria.

Every kind of creative play activity has evaluation and self-judgement: the individual is making judgements about pleasure, and often why it is pleasurable. This is often because they want to replicate this pleasure in the future, and oddly enough, learning is pleasurable. So when we teach a pleasurable activity, the learning may be pleasurable. This means chunking the learning and concepts into larger meaning units such as complex terms and concepts, which represent ideas, patterns, objects, and qualities. Thus, crystallized intelligence can be constructed through play as long as the play experience is linked and connected to help the learner to define and comprehend the terms (assessment criteria). So when the learner talks about their boat, perhaps they should be asked to sketch it first, and then use specific terms to explain their design:

Bow is the frontmost part of the hull

Stern is the rear-most part of the hull

Port is the left side of the boat when facing the Bow

Starboard is the right side of the boat when facing the Bow

Waterline is an imaginary line circumscribing the hull that matches the surface of the water when the hull is not moving.

Midships is the midpoint of the LWL (see below). It is half-way from the forwardmost point on the waterline to the rear-most point on the waterline.

Baseline is an imaginary reference line from which vertical distances are measured. It is usually located at the bottom of the hull.

Along with the learning activity and targeted learning criteria and content, the student should be asked a guiding question to help structure their description.

So, how do these parts affect the performance of the whole?

Additionally, the learner should be adopting the language (criteria) from the rubric to build comprehension. This means drawing on perception, experience, similarities, and contrasts to understand Bow and Stern, or even Beauty.

Experiential Learning for Fluidity and Crystallization

What the tradition of intelligence testing offers is an insight as to how an educator might support students. What we know is that intelligence is not innate. It can change through learning opportunities. The goal of the teacher should be to provide experiential learning that extends Fluid Intelligence by developing problem solving, and to link this process to crystallized concepts in vocabulary terms that encapsulate complex processes, ideas, and descriptions.

The real technology in a 21st Century Classroom is in the presentation and collection of information. It is the art of designing assessment for data-driven decision making. The role of the teacher should be in grounding crystallized academic concepts in experiential learning with assessments that provide structure for creative problem solving. The teacher creates assessments where the learning is the assessment. The learner is scaffolded through the activity with the guidance of assessment criteria.

A rubric, which provides criteria for quality and excellence, can scaffold creativity, innovation, and content learning simultaneously. A well-conceived assessment guides students to understand descriptions of quality and helps students to understand crystallized concepts.

An example of a criteria-driven assessment looks like this:

| | Purpose & Plan | Isometric Sketch | Vocabulary | Explanation |
|---|---|---|---|---|
| Level up | Has identified event and hull design with reasoning for appropriateness. | Has drawn a sketch where length, width, and height are represented by lines 120 degrees apart, with all measurements in the same scale. | Understanding is clear from the use of five key terms from the word wall to describe how and why the boat hull design will be successful for the chosen event. | Clear connection between the hull design, event, sketch, and important terms from word wall and next steps for building a prototype and testing. |
| Approaching | Has chosen a hull that is appropriate for event but cannot connect the two. | Has drawn a sketch where length, width, and height are represented. | Uses five key terms but struggles to demonstrate understanding of the terms in usage. | Describes design elements, but cannot make the connection of how they work together. |
| Do it again | Has chosen a hull design but it may not be appropriate for the event. | Has drawn a sketch but it does not have length, width, and height represented. | Does not use five terms from word wall. | Struggles to make a clear connection between conceptual design stage elements. |
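For anyone who keeps rubric results in software, the structure above can be sketched in a few lines of Python. This is only an illustration under my own assumptions: the word wall here reuses the hull terms listed earlier, and the function names (`terms_used`, `summarize`) are hypothetical. Counting terms only checks the "uses five terms" part of the Vocabulary criterion; whether the learner understands those terms is still a judgement the teacher makes.

```python
# Levels and criteria are taken from the rubric, ordered lowest to highest.
LEVELS = ["Do it again", "Approaching", "Level up"]
CRITERIA = ["Purpose & Plan", "Isometric Sketch", "Vocabulary", "Explanation"]

# Hypothetical word wall, assumed to be the hull terms defined earlier.
WORD_WALL = {"bow", "stern", "port", "starboard",
             "waterline", "midships", "baseline"}


def terms_used(explanation):
    """Return the word-wall terms that appear in a learner's explanation."""
    words = {w.strip(".,;:!?()\"'").lower() for w in explanation.split()}
    return WORD_WALL & words


def summarize(ratings):
    """Group the criteria by the level the teacher assigned to each one."""
    summary = {level: [] for level in LEVELS}
    for criterion in CRITERIA:
        summary[ratings[criterion]].append(criterion)
    return summary


explanation = ("The bow is narrow, the stern is wide. "
               "Port and starboard are symmetric along the waterline.")
found = terms_used(explanation)
print(sorted(found), len(found) >= 5)

ratings = {
    "Purpose & Plan": "Level up",
    "Isometric Sketch": "Approaching",
    "Vocabulary": "Level up",
    "Explanation": "Do it again",
}
print(summarize(ratings))
```

The summary shows at a glance which criteria still need another pass, which is the kind of feedback loop a game gives a player.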

What is important about this rubric is that it guides the learner in understanding quality and assessment. It also familiarizes the learner with key crystallized concepts as part of the assessment descriptions. In order to be successful in this playful, experiential activity (boat building), the learner must learn to comprehend and demonstrate knowledge of the vocabulary scattered throughout the rubric, such as isometric, reasoning, etc. This connection to complex terminology grounded in experience is what builds knowledge and competence. When an educator can coach a student in connecting their experiential learning with the assessment criteria, they construct crystallized intelligence through grounding the concept in experiential learning, and potentially expand fluid intelligence through awareness of new patterns in form and structure.

Play is Learning, Learning is Measurable

Just because someone plays or explores does not mean this learning is immeasurable. The truth is, research on creative breakthroughs demonstrates that authors of great innovation learned through years of dedicated practice and were often judged, assessed, and evaluated. This feedback from their teachers led them to new understanding and new heights. Great innovators often developed crystallized concepts that resulted from experience in developing fluid intelligence. This can come from copying the genius of others by replicating their breakthroughs; it comes from repetition and making basic skills automatic, so that they could explore the larger patterns resulting from their actions. It was the result of repetition and exploration, where they could reason, experiment, and experience without thinking about the mechanics of their actions. This meant learning the content and skills from the knowledge domain and developing some level of automaticity. What sets an innovator apart, it seems, is tenacity and being playful in their work, and working hard at their play.

According to Thomas Edison:

During all those years of experimentation and research, I never once made a discovery. All my work was deductive, and the results I achieved were those of invention, pure and simple. I would construct a theory and work on its lines until I found it was untenable. Then it would be discarded at once and another theory evolved. This was the only possible way for me to work out the problem. … I speak without exaggeration when I say that I have constructed 3,000 different theories in connection with the electric light, each one of them reasonable and apparently likely to be true. Yet only in two cases did my experiments prove the truth of my theory. My chief difficulty was in constructing the carbon filament. . . . Every quarter of the globe was ransacked by my agents, and all sorts of the queerest materials used, until finally the shred of bamboo, now utilized by us, was settled upon.

On his years of research in developing the electric light bulb, as quoted in “Talks with Edison” by George Parsons Lathrop in Harpers magazine, Vol. 80 (February 1890), p. 425

So when we encourage kids to be creative, we must also understand the importance of all the content and practice necessary to break through creatively. Edison was taught how to be methodical, critical, and observant. He understood the known patterns and made variations. It is important to know the known forms to know the importance of breaking forms. This may involve copying someone else’s design or ideas. Thomas Edison also spoke to this when he said:

Everyone steals in commerce and industry. I have stolen a lot myself. But at least I know how to steal.

Edison stole ideas from others (just as Watson and Crick were accused of doing). The point Edison seems to be making here is that he knew how to steal, meaning he saw how the parts fit together. He may have taken ideas from a variety of places, but he had the knowledge, skill, and vision to put them together. This synthesis of ideas took awareness of the problem, the outcome, and how things might work. Lots and lots of experience and practice.

To attain this level of knowledge and experience, perhaps stealing ideas, or copying and imitation, are not a bad idea for classroom learning? However, copying someone else in school is viewed as cheating rather than as a starting point. Perhaps instead, we can take the criteria of examples and design classroom problems in ways that allow discovery and the replication of prior findings (the basis of scientific laws). It is often said that imitation is the greatest form of flattery. Imitation is also one of the ways we learn. In the tradition of play research, mimesis is imitation–Aristotle held that it was “simulated representation”.

The Role of Play and Games

In closing, my hope is that we not use the terms “creativity” and “innovation” as suitcase words to diminish such things as minimum standards. We need minimum standards.

But when we talk about teaching for creativity and innovation, where we need to start is the way that we gather data for assessment. Often assessments are unimaginative in themselves. They are applied in ways that distract from learning, because they have become the learning. One of the worst outcomes of this practice is that students believe that they are knowledgeable after passing a minimum standards test. This is the soft bigotry of low expectations. Assessment should be adaptive, criteria driven, and modeled as a continuous improvement cycle.

This does not mean that we must drill and kill kids in grinding mindless repetition. Kids will grind towards a larger goal where they are offered feedback on their progress. They do it in games.

Games are structured forms of play. They are criteria driven, and by their very nature, games assess, measure, and evaluate. But they are only as good as their assessment criteria.

These concepts should be embedded in creative active inquiry that will allow the student to embody their learning and memory. However, many of the creative, inquiry-based lessons I have observed tend to ignore the focus of academic language–the crystallized concepts, such as “what is fast?”, “what is beauty?”, “what is balance?”, or “what is conflict?” The focus seems to be on interacting with content rather than building and chunking the concepts with experience. When Plato describes the world of forms, and wants us to understand the essence of the chair, i.e., “what is chairness?”, we may have to look at a lot of chairs to understand chairness. But this is how we build conceptual knowledge, and it should be considered when constructing curriculum and assessment. A guiding curricular question should be:

How does the experience inform the concepts in the lesson?

There is a way to use data-driven instruction in very creative lessons, just as there is in the very unimaginative drill and kill approach. Teachers and assessment coordinators need to take the leap and learn to use data collection in creative ways, in constructive assignments that promote experiential learning with crystallized academic concepts.

If you have kids make a diorama of a story, have them use the concepts that are part of the standards and testing: Plot, Character, Theme, Setting, etc. Make them demonstrate and explain. If you want kids to learn physics, have them make a boat and connect the terms through discovery. Use their inductive learning and guide them to conceptual understanding. This can be done through the use of informative assessments, such as rubrics and scales for assessment. Evaluation and creativity are not contradictory or mutually exclusive. These seeming opposites are complementary, and can be achieved through embedding the crystallized, higher order concepts into meaningful work.