In order to ensure the best user experience for Rainbow Six: Siege, the Montreal User Research Lab had to rethink the way they tested the game, bringing playtests and analytics to a whole new level: the live test experience.

Using their knowledge and experience on the Rainbow Six Siege production, the speakers will:

• Present succinctly the Rainbow Six franchise and its history

• Describe user research challenges of a multiplayer focused game

• Discuss the limitations of classic user tests

• Introduce live tests – Closed Alpha, Closed Beta, and Open Beta

o The impacts on the user research, analytics, and production pipelines

o The impacts on reporting and how user research analytics helped improve the game

• Show the changes in terms of communication to all parties

• Give insights on the first months post launch, and potentially how post launch data is or can be used as live test data.

CFP: eSports and professional game play III

The purpose of this third special issue is to explore, discover and share insights and research in eSports.

Authors are invited to submit manuscripts that

- Examine the emergence of eSports

- The uses of streaming technology

- Traditions of games that support professional players – chess, go, bridge, poker, League of Legends, Dota 2, StarCraft

- Fan perspectives

- Professional player perspectives

- Market analysis

- Meta-analyses of existing research on eSports

- Answer specific questions such as:

- Case studies, worked examples, empirical and phenomenological, application of psychological and humanist approaches?

- Field research

- Face to face interviewing

- Creation of user tests

- Gathering and organizing statistics

- Define Audience

- User scenarios

- Creating Personas

- Product design

- Feature writing

- Requirement writing

- Content surveys

- Graphic Arts

- Interaction design

- Information architecture

- Process flows

- Usability

- Prototype development

- Interface layout and design

- Wire frames

- Visual design

- Taxonomy and terminology creation

- Copywriting

- Working with programmers and SMEs

- Brainstorm and managing scope (requirement) creep

- Design and UX culture

Potential authors are encouraged to contact Brock Dubbels (Dubbels@mcmaster.ca) to ask about the appropriateness of their topic.

Deadline for Submission September 15, 2017.

Authors should submit their manuscripts to the submission system using the following link:

http://www.igi-global.com/authorseditors/titlesubmission/newproject.aspx

(Please note authors will need to create a member profile in order to upload a manuscript.)

Manuscripts should be submitted in APA format.

They will typically be 5000-8000 words in length.

Full submission guidelines can be found at: http://www.igi-global.com/journals/guidelines-for-submission.aspx

Mission – IJGCMS is a peer-reviewed, international journal devoted to the theoretical and empirical understanding of electronic games and computer-mediated simulations. IJGCMS publishes research articles, theoretical critiques, and book reviews related to the development and evaluation of games and computer-mediated simulations. One main goal of this peer-reviewed, international journal is to promote a deep conceptual and empirical understanding of the roles of electronic games and computer-mediated simulations across multiple disciplines. A second goal is to help build a significant bridge between research and practice on electronic gaming and simulations, supporting the work of researchers, practitioners, and policymakers.

Olivier Guédon

Olivier Guédon is a Game Intelligence Analyst with Ubisoft’s Montreal User Research Lab. His experience in the video game industry started at Ubisoft Montreal in 2007 in information risk management for the production teams of brands like Assassin’s Creed and Far Cry. He then moved into risk analytics before returning to Ubisoft at the User Research Lab in 2014 to lead the analytics efforts for the Rainbow Six Siege production.

Join us at the next GURsig, submit a talk!

Game User Research Summit 2017 call for papers

Learn more about Game User Research Special Interest Group (GURsig):

About the Game User Research sig at IGDA

Jakob Nielsen says:

The Games User Research Summit was a great conference with many insightful talks by top professionals.

I am pleased to provide you with the official announcement for the latest issue of the International Journal of Gaming and Computer-Mediated Simulations 8(2). Please post it to the appropriate listservs, websites, media outlets, etc. to further increase the awareness of the latest issue of IJGCMS. This is a special issue on Transmedia Storytelling, led by our Special Issue Guest Editor, Dr. Karen Schrier.

http://www.igi-global.com/journals/abstract-announcement/131817

Enjoy!

CFP: eSports and professional game play

The purpose of this special issue is to investigate the rise of eSports.

Much has happened in the area of professional gaming since the Space Invaders Championship of 1980. We have seen live Internet streaming eclipse televised eSports events, such as on the American show Starcade.

Authors are invited to submit manuscripts that

- Examine the emergence of eSports

- The uses of streaming technology

- Traditions of games that support professional players – chess, go, bridge, poker, League of Legends, Dota 2, StarCraft

- Fan perspectives

- Professional player perspectives

- Market analysis

- Meta-analyses of existing research on eSports

- Answer specific questions such as:

- How should game user research examine the emergence of eSports? Should we differentiate pragmatic and hedonic aspects of the game?

- What are the methodologies for conducting research on eSports?

- What is the role of the player, the audience, the developer, the venue?

- Case studies, worked examples, empirical and phenomenological, application of psychological and humanist approaches?

- Field research

- Face to face interviewing

- Creation of user tests

- Gathering and organizing statistics

- Define Audience

- User scenarios

- Creating Personas

- Product design

- Feature writing

- Requirement writing

- Content surveys

- Graphic Arts

- Interaction design

- Information architecture

- Process flows

- Usability

- Prototype development

- Interface layout and design

- Wire frames

- Visual design

- Taxonomy and terminology creation

- Copywriting

- Working with programmers and SMEs

- Brainstorm and managing scope (requirement) creep

- Design and UX culture

Potential authors are encouraged to contact Brock R. Dubbels (Dubbels@mcmaster.ca) to ask about the appropriateness of their topic.

Deadline for Submission July 2016.

Authors should submit their manuscripts to the submission system using the following link:

http://www.igi-global.com/authorseditors/titlesubmission/newproject.aspx

(Please note authors will need to create a member profile in order to upload a manuscript.)

Manuscripts should be submitted in APA format.

They will typically be 5000-8000 words in length.

Full submission guidelines can be found at: http://www.igi-global.com/journals/guidelines-for-submission.aspx

Can UX research take a game that is not fun, and make it more fun?

CFP: UX — What is User Experience in Video Games?

The purpose of this special issue is to investigate the nature of video game UX.

ISO 9241-210 defines user experience as “a person’s perceptions and responses that result from the use or anticipated use of a product, system or service”. According to the ISO definition, user experience includes all the users’ emotions, beliefs, preferences, perceptions, physical and psychological responses, behaviors and accomplishments that occur before, during and after use. The ISO also lists three factors that influence user experience: system, user and the context of use.

In this issue we hope to present practitioner and academic perspectives by covering a broad range of user experience evaluation methods and concepts; the application of various user experience evaluation methods; how UX fits into the video game development cycle; methods of evaluating user experience during and after game play; and social play.

Authors are invited to submit manuscripts that

- Present empirical findings on UX in game development

- Push the theoretical knowledge of UX

- Conduct meta-analyses of existing research on UX

- Answer specific questions such as:

- Case studies, worked examples, empirical and phenomenological, application of psychological and humanist approaches?

- Field research

- Universal Access

- Face to face interviewing

- Creation of user tests

- Gathering and organizing statistics

- Define Audience

- User scenarios

- Creating Personas

- Product design

- Feature writing

- Requirement writing

- Content surveys

- Graphic Arts

- Interaction design

- Information architecture

- Process flows

- Usability

- Prototype development

- Interface layout and design

- Wire frames

- Visual design

- Taxonomy and terminology creation

- Copywriting

- Working with programmers and SMEs

- Brainstorm and managing scope (requirement) creep

- Design and UX culture

- What is the difference between user experience and usability?

- How does UX research extend beyond examination of the UI? Should we differentiate pragmatic and hedonic aspects of the game?

- Who is a User Experience researcher, what do they do, and how does one become one?

- What are the methodologies?

Potential authors are encouraged to contact Brock Dubbels (Dubbels@mcmaster.ca) to ask about the appropriateness of their topic.

Deadline for Submission January 2014.

Authors should submit their manuscripts to the submission system using the following link:

http://www.igi-global.com/authorseditors/titlesubmission/newproject.aspx

(Please note authors will need to create a member profile in order to upload a manuscript.)

Manuscripts should be submitted in APA format.

They will typically be 5000-8000 words in length.

Full submission guidelines can be found at: http://www.igi-global.com/journals/guidelines-for-submission.aspx

Gamiceuticals: Video Games for medical diagnosis, treatment, and professional development

Should games and play be used to diagnose or treat a medical condition? Can video games provide professional development for health professionals? To gather medical data? To promote adherence and behavioral change? Or even become a part of our productivity at work? In this presentation, psychological research will be presented to make a case for how games currently are, and potentially can be, used in the eHealth and medical sector.

Join MacGDA for a talk with Brock Dubbels on issues related to games, health, and psychology.

Sign up at http://gme.eventbrite.ca/

Brock Dubbels is an experimental psychologist at the G-Scale Game development and testing laboratory at McMaster University in Hamilton, Ontario. His appointment includes work in the Dept. of Computing and Software (G-Scale) and the McMaster Research Libraries. Brock specializes in games and software for knowledge and skill acquisition, eHealth, and clinical interventions.

Brock Dubbels has worked since 1999 as a professional in education and instructional design. His specialties include comprehension, problem solving, and game design. From these perspectives he designs face-to-face, virtual, and hybrid learning environments, exploring new technologies for assessment, delivering content, creating engagement with learners, and investigating ways people approach learning. He has worked as a Fulbright Scholar at the Norwegian Institute of Science and Technology; at Xerox PARC and Oracle, and as a research associate at the Center for Cognitive Science at the University of Minnesota. He teaches course work on games and cognition, and how learning research can improve game design for return on investment (ROI). He is also the founder and principal learning architect at www.vgalt.com for design, production, usability assessment and evaluation of learning systems and games.

Join the McMaster Game Development Association: http://macgda.com/

The eHealth Seminar Series welcomes

Brock Dubbels, PhD

Department of Computing and Software, McMaster University

Abstract:

Do games and play have a place as medical interventions? How can games and play inform designing instruction and assessment contexts? Can games and similar software design persuade individuals towards healthier lifestyles and adherence? In this overview, research will be presented from studies and experimentation from laboratory and instructional settings as evidence for using games for accelerating learning outcomes, persuasion, medical interventions, as well as professional development and productivity. Games offer individuals a learning environment rich with choice and feedback . . . not only for gathering information about learning, but scaffolding learners towards competence and mastery in recall, comprehension, and problem solving. The difficulty with games may be our view that games are a form of play, an undirected, frivolous children’s activity. In this presentation research and examples of games and play inspired activities will be presented for motivating learners, designing effective instruction, improving comprehension and problem solving, providing therapeutic interventions, aiding in work-place productivity, and professional development.

Brock Dubbels, Ph.D., is an experimental psychologist at the G-Scale Game development and testing laboratory at McMaster University in Hamilton, Ontario. His appointment includes work in the Dept. of Computing and Software (G-Scale) and the McMaster Research Libraries. Brock specializes in games and software for knowledge and skill acquisition, eHealth, and clinical interventions.

Brock Dubbels has worked since 1999 as a professional in education and instructional design. His specialties include comprehension, problem solving, and game design. From these perspectives he designs face-to-face, virtual, and hybrid learning environments, exploring new technologies for assessment, delivering content, creating engagement with learners, and investigating ways people approach learning. He has worked as a Fulbright Scholar at the Norwegian Institute of Science and Technology; at Xerox PARC and Oracle, and as a research associate at the Center for Cognitive Science at the University of Minnesota. He teaches course work on games and cognition, and how learning research can improve game design for return on investment (ROI). He is also the founder and principal learning architect at www.vgalt.com for design, production, usability assessment and evaluation of learning systems and games.

And rumour has it, he has a sweet bike!

I want to invite you to a colloquium on March 7 at 14:30 in the Department of Psychology, Neuroscience & Behaviour (PNB)

at McMaster University

Video Games as Learning Tools

Brock Dubbels, PhD.,

G-ScalE Game Development and Testing Lab

McMaster University

Dubbels@McMaster.ca

Should games and play be considered important in designing instructional contexts? Should they be used for professional development, or even become a part of our productivity at work? Games offer individuals a learning environment rich with choice and feedback, not only for gathering information about a student’s learning, but also for demanding mastery in recall, comprehension, and problem solving. The difficulty with games may be our view that games are a form of play, an undirected, frivolous children’s activity. In this presentation, research and examples of games and play inspired activities will be presented for motivating learners, designing effective instruction, improving comprehension and problem solving, providing therapeutic interventions, aiding in work-place productivity, and professional development.

Department of Psychology, Neuroscience & Behaviour (PNB)

Psychology Building (PC), Room 102

McMaster University

1280 Main Street West

http://www.science.mcmaster.ca/pnb/news-events/colloquium-series-events/details/274-brock-dubbels-tba.html

What we cannot know or do individually, we may be capable of collectively.

My research examines the transformation of perceptual knowledge into conceptual knowledge. Conceptual knowledge can be viewed as crystallized, which means that it has become abstracted and is often symbolized in ways that do not make the associated meaning obvious. Crystallized knowledge is the outcome of fluid intelligence, or the ability to think logically and solve problems in novel situations, independent of acquired knowledge. I investigate how groups and objects may assist in crystallization of knowledge, or the construction of conceptual understanding.

I am currently approaching this problem from the perspective that cognition is externalized and extended through objects and relationships. This view posits that skill, competence, and knowledge are learned through interaction aided by objects imbued with collective knowledge.

Groups make specialized information available through objects and relationships so that individual members can coordinate their actions and do things that would be hard or impossible for them to enact individually. To examine this, I use a socio-cognitive approach, which views cognition as distributed, where information processing is imbued in objects and communities and aids learners in problem solving.

This socio-cognitive approach is commonly associated with cognitive ethnography and the study of social networks. In particular, I have special interest in how play, games, modeling, and simulations can be used to enhance comprehension and problem solving through providing interactive learning. In my initial observational studies, I have found that games are structured forms of play, which work on a continuum of complexity:

- Pretense, imagery and visualization of micro worlds

- Tools, rules, and roles

- Branching / probability

Games hold communal knowledge, which can be learned through game play. An example of this comes from the board game Ticket to Ride. In this strategy game players take on the role of a railroad tycoon in the early 1900s. The goal is to build an empire that spans the United States while making shrewd moves that block your opponents from being able to complete their freight and passenger runs to various cities. Game play scaffolds the learner in the history and implications of early transportation through taking on the role of an entrepreneur and learning the context and process of building up a railroad empire. In the course of the game, concepts are introduced, along with language and value systems, based upon the problem space created by the game mechanics (artifacts, scoring, rules, and language). The game can be analyzed as a cultural artifact containing historical information; a vehicle for content delivery as a curriculum tool; as well as an intervention for studying player knowledge and decision-making.

I have observed that learners interact with games in increasingly complex ways as they come to understand the game as a system. As the player gains top sight, a view of the whole system, they play with greater awareness of the economy of resources, and in some cases an aesthetic of play. For beginning players, I have observed the following progression:

- Trial and error – forming a mental representation, or situation model of how the roles, rules, tools, and contexts work for problem solving.

- Tactical trials – a successful tactic is generated to solve problems using the tools, rules, roles, and contexts. This tactic may be modified for use in a variety of ways as goals and context change in the game play.

- Strategies – the range of tactics has resulted in strategies that come from a theory of how the game works. This approach to problem solving indicates a growing awareness of systems knowledge and of the purpose or criteria for winning, and is a step towards top sight. Players understand that there are decision branches, and that each branch comes with a risk and reward they can evaluate in the context of economizing resources.

- Layered strategies – the player is now economizing resources and playing for optimal success, with a well-developed mental representation of the game’s criteria for winning and of how to achieve a high score rather than just finish.

- Aesthetic of play – the player understands the system and has learned to use and exploit ambiguities in the rules and environment to play with an aesthetic that sets the player apart from others. The game play is characterized by surprising solutions to the problem space.

For me, games are a structured form of play. As an example, a game may playfully represent an action with associated knowledge, such as becoming a railroad tycoon, driving a high performance racecar, or even raising a family. Games always involve contingent decision-making, forcing the players to learn and interact with cultural knowledge simulated in the game.

Games currently take a significant investment of time and effort to collectively construct. These objects follow in a history of collective construction by groups and communities. Consider cartography and the creation of a map as an example of collective distributed knowledge imbued in an object. According to Hutchins (1996),

“A navigation chart represents the accumulation of more observations than any one person could make in a lifetime. It is an artifact that embodies generations of experience and measurement. No navigator has ever had, nor will one ever have, all the knowledge that is in the chart.”

A single individual can use a map to navigate an area with competence, if not expertise. Observing an individual learning to use a map, or even construct one, is instructive for learning about comprehension and decision-making. Interestingly, games provide structure to play, just as maps and media appliances provide structure to data to create information. Objects such as maps and games are examples of collective knowledge, and are what Vygotsky termed a pivot.

The term pivot was initially conceptualized in describing children’s play, particularly as a toy. A toy is a representation used in aiding knowledge construction in early childhood development. This is the transition where children may move from recognitive play to symbolic and imaginative play, i.e. the child may play with a phone the way it is supposed to be used to show they can use it (recognitive), and in symbolic or imaginative play, they may pretend a banana is the phone.

This is an important step since representation and abstraction are essential in learning language, especially print and alphabetical systems for reading and other discourse. In this sense, play provides a transitional stage in this direction whenever an object (for example a stick) becomes a pivot for severing the meaning of horse from a real horse. The child cannot yet detach thought from object (Vygotsky, 1976, p. 97). For Vygotsky, play represented a transition in comprehension and problem solving, where the child moved from external processing (imagination in action) to internal processing (imagination as play without action).

In my own work, I have studied the play of school children and adults as learning activities. This research has informed my work in classroom instruction and game design. Learning activities can be structured as a game, extending the opportunity to learn content, and extend the context of the game into other aspects of the learner’s life, providing performance data and allowing for self-improvement with feedback, and data collection that is assessed, measured and evaluated for policy.

My research and publications have been informed by my work as a tenured teacher and software developer. A key feature of my work is the importance of designing for learning transfer and construct validity. When I design a learning environment, I do so with research in mind. Action research allows for reflection and analysis of what I created, what the learners experienced, and an opportunity to build theory. What is unique about what I do is the systems approach and the way I reverse engineer play as a deep and effective learning tool into transformative learning, where pleasurable activities can be counted as learning.

Although I have published using a wide variety of methodologies, I have used cognitive ethnography specifically for game and play analysis (Dubbels 2008, 2011). Cognitive ethnography is a methodology typically associated with distributed cognition; it examines how communities contain varying levels of competence and expertise, and how they may imbue that knowledge in objects. It involves observation and analysis of space or context, specifically conceptual space, physical space, and social space. The cognitive ethnographer transforms observational data and interpretation of space into meaningful representations so that cognitive properties of the system become visible (Hutchins, 2010; 1995). Cognitive ethnography seeks to understand cognitive process and context by examining them together, thus eliminating the false dichotomy between psychology and anthropology. This can be very effective for building theories of learning while being accessible to educators.

My current interest is in using cognitive ethnographic methodology alongside traditional forms of inquiry. This serves as an opportunity to move between inductive and deductive inquiry and observation to build a nomological network (Cronbach & Meehl, 1955), using measures and quantified observations with the multitrait-multimethod matrix (Campbell & Fiske, 1959) for construct validity (Cook & Campbell, 1979; Campbell & Stanley, 1966), especially in relation to comprehension and problem solving based upon the Event Indexing Model (Zwaan & Radvansky, 1998).

We distribute knowledge because it is impossible for a single human being, or even a group, to have mastery of all knowledge and all skills (Levy, 1997). For this reason I study access and quality of collective group relations and objects and the resulting comprehension and problem solving. The use of these objects and relations can scaffold learners and inform our understanding of how perceptual knowledge is internalized and transformed into conceptual knowledge through learning and experience.

Specialties

Educational research in cognitive psychology, social learning, identity, curriculum and instruction, game design, theories of play and learning, assessment, instructional design, and technology innovation.

Additionally:

The convergence of media technologies now allows for collection, display, creation, and broadcast of information as narrative, image, and data. This convergence of function makes two ideas important in the study of learning:

- The ability to create media communication through narrative, image, data analysis, and information graphics is becoming more accessible to non-experts through media appliances such as phones, tablets, game consoles and personal computers.

- These media appliances have taken very complex behaviors such as film production, which in the past required teams of people with special skill and knowledge, and have imbued these skills and knowledge in hand-held devices that are easy to use, and are available to the general population.

- This accessibility allows novices to learn complex media production, analysis, and broadcast, and allows for the study of these devices as objects that have been imbued with knowledge and skill, as externalized cognition. Through the use of these devices, the general population may learn complex skills and knowledge that may have required years of specialized training in the past. The interaction of individuals learning to use these appliances and devices can be studied as a progression of internalizing knowledge and skill imbued in objects.

- The convergence of media technologies into small, single (even handheld) devices emphasizes that technology for producing media may change, but the narrative has remained relatively consistent.

- This consistency of media as narrative, imagery, and data analysis emphasizes the importance of the continued study of narrative comprehension and problem solving through the use of these media appliances.

I am concerned that Gamefication obfuscates the real issue in learning:

Transfer.

Is there evidence that game-based learning leads to far transfer?

Without learning transfer, it doesn’t matter how a person learned, whether from a piece of software, watching an expert, rote memorization, or the back of a cereal box. What is important is that learning occurred, and how we know that learning occurred.

This leads to issues of assessment and evaluation.

Transfer and Games: How do we assess this?

The typical response from gameficators is that assessment does not measure the complex learning from games. I have been there and said that. But I have learned that this response is overly dismissive of assessment and utterly simplistic. As I investigated assessment and psychometrics, I learned how simplistic my statements were. Games themselves are assessment tools, and I learned that by learning about assessment.

This Gameficator (yes, I am now going to 3rd person) had to seek knowledge beyond his ludic and narratological powers. He had to learn the mysteries and great lore of psychometrics, instructional design, and educational psychology. It was through this journey into the dark arts that he has been able to overcome some of the traps of Captain Obvious, and his insidious powers to sidetrack and obfuscate through jargonification, and worse, like taking credit for previously documented phenomena by imposing a new name . . . kind of like my renaming Canada to the now improved, muchmorebetter name: Candyland.

In reality, I had to learn the language and content domain of learning to be an effective instructional designer, just as children learn the language and content domains of science, literature, math, and civics.

What I have learned through my journey is that if a learner cannot explain a concept, this inability to explain and demonstrate may be an indicator that the learning from the game experience is not crystallized: the learner does not have a conceptual understanding that they can explain or express.

Do games deliver this?

Does gamefied learning deliver this?

Gameficators need to address this if this is to be more than a captivating trend.

I am fearful that the good that comes from this trend will not matter if we are only creating jargonification (even if it is more accessible). It is not enough to redescribe an established instructional design technique if we cannot demonstrate its effectiveness. We should be asking how learning in game-like contexts enhances learning and how to measure that.

Measurement and testing are important because well-designed tests and measures can deliver an assessment of crystallized knowledge–does the learner have a chunked conceptual understanding of a concept, such as “resistance”, “average”, “setting”?

Sure, students may have a gist understanding of a concept. The teacher may even see this qualitatively, but the student cannot express it in a test. So is that comprehension? I think not. The point of the test is an un-scaffolded demonstration of comprehension.

Maybe the game player has demonstrated the concept of “resistance” in a game by choosing a boat with less resistance to go faster in the game space, but this is not evidence that they understand the term conceptually. This example from a game may be an important aspect of perceptual learning that may lead to the eventual ability to explain (conceptual learning), but it is not evidence of comprehension of “resistance” as a concept.

So when we look at game based learning, we should be asking what it does best, not whether it is better. Games can become a type of assessment where contextual knowledge is demonstrated. But we need to go beyond perceptual knowledge–reacting correctly in context and across contexts–to conceptual knowledge: the ability to explain and demonstrate a concept.

My feeling is that one should be skeptical of Gameficators who pontificate without pointing to the traditions in educational research. If Gamefication advocates want to influence education and assessment, they should attempt to learn the history, and provide evidence of how their terms differ from the established terms they seek to replace.

A darker shadow is cast

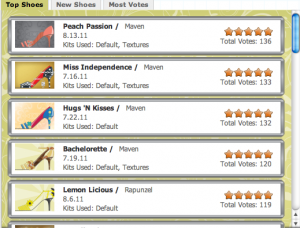

Another concern I have is that perhaps not all gameficators are created equal. Maybe some gameficators are not really advocating for the game elements that are fundamental aspects of games? Maybe there are people with no ludic-narratological powers donning the cape of gamefication! I must ask, is this leaderboard what makes for “gameness”? Or does a completion task-bar make for a gamey experience?

Does this leaderboard for shoe preference tell us anything but shoe preference? Where is the game?

Are there some hidden game mechanics that I am failing to apprehend?

Is this just villainy?

Here are a couple of ideas that echo my own concerns:

It seems to me that:

- some of the elements of gamefication rely heavily on aspects of games that are not new, just rebranded.

- some of the elements of gamefication aren’t really “gamey”.

What I like about games is that they can provide multimodal experiences, and provide context and prior knowledge without the interference of years of practice. This is new. Games accelerate learning by reducing some of the gatekeeping issues. Now a person without the strength, dexterity, and madness can share in some of the experience of riding a skateboard off a ramp. The benefit of games may be in the increased richness of a virtual interactive experience, and in providing the immediate task competencies without the time required to become competent. Games provide a protocol, and they expedite and scaffold players toward a fidelity of conceptual experience.

For example, in RIDE, the player is immediately able to do tricks that require many years of practice because the game exaggerates the fidelity of experience. This cuts the time it might take to develop conceptual knowledge by reducing the necessity of coordination, balance, dexterity, and insanity to ride a skateboard up a ramp, do a trick in the air, and then land unscathed.

Gameficators might embrace this notion.

Games can enhance learning in a classroom, and enhance what is already called experiential learning.

My point:

words, terms, concepts, and domain praxis matter.

Additionally, we seem to be missing the BIG IDEA:

- that the way we structure problems is likely predictive of a successful solution.

Herb Simon expressed the idea in his book The Sciences of the Artificial this way:

“solving a problem simply means representing it so as to make the solution transparent.” (1981: p. 153)

Games can help structure problems.

But do they help learners understand how to structure a problem? Do they deliver conceptualized knowledge? Common vocabulary indicative of a content domain?

The issue is really about What Games are Good at Doing, not whether they are better than some other traditional form of instruction.

Do they help us learn how to learn? Do they lead to crystallized conceptual knowledge that is found in the use of common vocabulary? In physics class, when we mention “resistance”, students should offer more than

“Resistance is futile”

or, a correct but non-applicable answer such as “an unwillingness to comply”.

The key is that the student can express the expected knowledge of the content domain in relation to the word. This is often what we test for. So how do games lead to this outcome?

I am not here to give gameficators a hard time (I have a self-created gamefication badge), but I would like to know that, when my learning is gamefied, the gameficator had some knowledge from the last century of instructional design and learning research, just as I want my physics student to know resistance as an operationalized concept in science, not a popular cultural saying from the BORG.

The concerns expressed here are applicable in most learning contexts. But if we are going to advocate the use of games, then perhaps we should look at how good games are effective, as well as how good lessons are structured. Perhaps more importantly,

- we may need to examine our prevalent misunderstandings about learning assessment;

- perhaps explore the big ideas and lessons that come from years of educational psychology research, rather than just renaming and creating a new platform without realizing or acknowledging that current ideas in gamefication stand on the shoulders of giants from a century of educational research.

Jargonification is a big concern. So let’s make our words matter and also look back as we look forward. Games are potentially powerful tools for learning, but not all may be effective in every purpose or context. What does the research say?

I am hopeful gamefication delivers a closer look at how game-like instructional design may enhance successful learning.