CFP Special Issue On: SURGE, Physics Games, and the Role of Design

Submission Due Date

5/15/2017

Guest Editors

Douglas Clark, Vanderbilt University, Nashville, TN, USA

Introduction

The purpose of this special issue is to investigate the role of design in the efficacy of physics games in terms of what is learned, by whom, and how. Importantly, studies should move beyond basic media comparisons (e.g., game versus non-game) to instead focus on the role of design and specifics about players’ learning processes. Thus, invoking the terminology proposed by Richard Mayer (2011), the focus should be on value-added and cognitive consequences approaches rather than media comparison approaches. Note that a broad range of research methodologies including a full gamut of qualitative, ethnographic, and microgenetic methodologies are encouraged as well as quantitative and data-mining perspectives. Furthermore, the focal outcomes and design qualities analyzed can span the range of functional, emotional, transformational, and social value elements outlined by Almquist, Senior, and Bloch (2016).

Recommended Topics

Authors are invited to submit manuscripts that

- Focus on the role of design beyond simple medium (i.e., move beyond simple tests of whether physics games can support learning to instead focus on how the design of the game, learning environment, and social setting influences what is learned, by whom, and how).

- Explore learning in games from the SURGE constellation of physics games and other physics games using qualitative, mixed, design-based research, quantitative, data-mining, or other methodologies.

- Focus on formal, recreational, and/or informal learning settings.

- Focus on any combination of player, student, teacher, designer, and/or any other participants.

- Answer specific questions such as:

- How do specific approaches to integrating learning constructs from educational psychology (e.g., worked examples, signaling, self-explanation) impact the efficacy of these approaches within digital physics games for learning?

- How do elements of design impact the value experienced by players in terms of the elements of functional, emotional, transformational, and social value outlined by Almquist, Senior, and Bloch (2016)?

- What is the role of the teacher in interaction with students and the design of a game in terms of learning outcomes?

- How does game design interact with gender in terms of what is learned, by whom, and how?

- How can designers balance learning goals and game-play goals to best support a diverse range of players and learners?

- How do specific sets of design features interact with players’ learning processes and game-play goals?

Submission Procedure

Potential authors are encouraged to contact Douglas Clark (clark@vanderbilt.edu) to ask about the appropriateness of their topic.

Authors should submit their manuscripts to the submission system using the link at the bottom of the call. (Please note authors will need to create a member profile in order to upload a manuscript.)

Manuscripts should be submitted in APA format.

They will typically be 5000-12000 words in length.

Full submission guidelines can be found at: http://www.igi-global.com/publish/contributor-resources/before-you-write/

All submissions and inquiries should be directed to the attention of:

Douglas Clark

Guest Editor

International Journal of Gaming and Computer-Mediated Simulations (IJGCMS)

Email: clark@vanderbilt.edu

Can UX research take a game that is not fun, and make it more fun?

CFP: UX — What is User Experience in Video Games?

The purpose of this special issue is to investigate the nature of video game UX.

ISO 9241-210[1] defines user experience as “a person’s perceptions and responses that result from the use or anticipated use of a product, system or service”. According to the ISO definition, user experience includes all the users’ emotions, beliefs, preferences, perceptions, physical and psychological responses, behaviors and accomplishments that occur before, during and after use. The ISO also lists three factors that influence user experience: system, user and the context of use.

In this issue we hope to present practitioner and academic perspectives on a broad range of topics: user experience evaluation methods and concepts; the application of various user experience evaluation methods; how UX fits into the video game development cycle; methods of evaluating user experience during and after game play; and social play.

Authors are invited to submit manuscripts that

- Present empirical findings on UX in game development

- Push the theoretical knowledge of UX

- Conduct meta-analyses of existing research on UX

- Answer specific questions such as:

- Case studies, worked examples, empirical and phenomenological, application of psychological and humanist approaches?

- Field research

- Universal Access

- Face to face interviewing

- Creation of user tests

- Gathering and organizing statistics

- Define Audience

- User scenarios

- Creating Personas

- Product design

- Feature writing

- Requirement writing

- Content surveys

- Graphic Arts

- Interaction design

- Information architecture

- Process flows

- Usability

- Prototype development

- Interface layout and design

- Wire frames

- Visual design

- Taxonomy and terminology creation

- Copywriting

- Working with programmers and SMEs

- Brainstorming and managing scope (requirement) creep

- Design and UX culture

- What is the difference between user experience and usability?

- How does UX research extend beyond examination of the UI? Should we differentiate pragmatic and hedonic aspects of the game?

- Who is a User Experience researcher, what do they do, and how does one become one?

- What are the methodologies?

Potential authors are encouraged to contact Brock Dubbels (Dubbels@mcmaster.ca) to ask about the appropriateness of their topic.

Deadline for Submission January 2014.

Authors should submit their manuscripts to the submission system using the following link:

http://www.igi-global.com/authorseditors/titlesubmission/newproject.aspx

(Please note authors will need to create a member profile in order to upload a manuscript.)

Manuscripts should be submitted in APA format.

They will typically be 5000-8000 words in length.

Full submission guidelines can be found at: http://www.igi-global.com/journals/guidelines-for-submission.aspx

Mission – IJGCMS is a peer-reviewed, international journal devoted to the theoretical and empirical understanding of electronic games and computer-mediated simulations. IJGCMS publishes research articles, theoretical critiques, and book reviews related to the development and evaluation of games and computer-mediated simulations. One main goal of this peer-reviewed, international journal is to promote a deep conceptual and empirical understanding of the roles of electronic games and computer-mediated simulations across multiple disciplines. A second goal is to help build a significant bridge between research and practice on electronic gaming and simulations, supporting the work of researchers, practitioners, and policymakers.

Gamiceuticals: Video Games for medical diagnosis, treatment, and professional development

Should games and play be used to diagnose or treat a medical condition? Can video games provide professional development for health professionals? To gather medical data? To support adherence and behavioral change? Or even become a part of our productivity at work? This presentation draws on psychological research to make a case for how games are currently used, and could potentially be used, in the eHealth and medical sector.

Join MacGDA for a talk with Brock Dubbels on issues related to games, health, and psychology.

Sign up at http://gme.eventbrite.ca/

Brock Dubbels is an experimental psychologist at the G-Scale Game development and testing laboratory at McMaster University in Hamilton, Ontario. His appointment includes work in the Dept. of Computing and Software (G-Scale) and the McMaster Research Libraries. Brock specializes in games and software for knowledge and skill acquisition, eHealth, and clinical interventions.

Brock Dubbels has worked since 1999 as a professional in education and instructional design. His specialties include comprehension, problem solving, and game design. From these perspectives he designs face-to-face, virtual, and hybrid learning environments, exploring new technologies for assessment, delivering content, creating engagement with learners, and investigating ways people approach learning. He has worked as a Fulbright Scholar at the Norwegian Institute of Science and Technology, at Xerox PARC and Oracle, and as a research associate at the Center for Cognitive Science at the University of Minnesota. He teaches course work on games and cognition, and how learning research can improve game design for return on investment (ROI). He is also the founder and principal learning architect at www.vgalt.com for design, production, usability assessment and evaluation of learning systems and games.

Join the MacMaster Game Development Association: http://macgda.com/

What we cannot know or do individually, we may be capable of collectively.

My research examines the transformation of perceptual knowledge into conceptual knowledge. Conceptual knowledge can be viewed as crystallized, which means that it has become abstracted and is often symbolized in ways that do not make the associated meaning obvious. Crystallized knowledge is the outcome of fluid intelligence, or the ability to think logically and solve problems in novel situations, independent of acquired knowledge. I investigate how groups and objects may assist in crystallization of knowledge, or the construction of conceptual understanding.

I am currently approaching this problem from the perspective that cognition is externalized and extended through objects and relationships. This view posits that skill, competence, and knowledge are learned through interaction, aided by objects imbued with collective knowledge.

Groups make specialized information available through objects and relationships so that individual members can coordinate their actions and do things that would be hard or impossible for them to enact individually. To examine this, I use a socio-cognitive approach, which views cognition as distributed, where information processing is imbued in objects and communities and aids learners in problem solving.

This socio-cognitive approach is commonly associated with cognitive ethnography and the study of social networks. In particular, I have special interest in how play, games, modeling, and simulations can be used to enhance comprehension and problem solving through providing interactive learning. In my initial observational studies, I have found that games are structured forms of play, which work on a continuum of complexity:

- Pretense, imagery and visualization of micro worlds

- Tools, rules, and roles

- Branching / probability

Games hold communal knowledge, which can be learned through game play. An example of this comes from the board game Ticket to Ride. In this strategy game players take on the role of a railroad tycoon in the early 1900s. The goal is to build an empire that spans the United States while making shrewd moves that block your opponents from being able to complete their freight and passenger runs to various cities. Game play scaffolds the learner in the history and implications of early transportation through taking on the role of an entrepreneur and learning the context and process of building up a railroad empire. In the course of the game, concepts are introduced, along with language and value systems based upon the problem space created by the game mechanics (artifacts, scoring, rules, and language). The game can be analyzed as a cultural artifact containing historical information; a vehicle for content delivery as a curriculum tool; as well as an intervention for studying player knowledge and decision-making.

I have observed that learners interact with a game in increasingly complex ways as they come to understand it as a system. As the player gains top sight, a view of the whole system, they play with greater awareness of the economy of resources, and in some cases an aesthetic of play. For beginning players, I have observed the following progression:

- Trial and error – forming a mental representation, or situation model of how the roles, rules, tools, and contexts work for problem solving.

- Tactical trials – a successful tactic is generated to solve problems using the tools, rules, roles, and contexts. This tactic may be modified for use in a variety of ways as goals and context change in the game play.

- Strategies – the range of tactics has resulted in strategies that come from a theory of how the game works. This approach to problem solving indicates a growing awareness of systems knowledge and of the purpose or criteria for winning, and is a step towards top sight. The player understands that there are decision branches, and that each decision branch comes with a risk and reward they can evaluate in the context of economizing resources.

- Layered strategies – the player is now making choices based upon managing and economizing resources, playing for optimal success with a well-developed mental representation of the game's criteria for winning and of how to achieve a high score rather than just finish.

- Aesthetic of play – the player understands the system and has learned to use and exploit ambiguities in the rules and environment to play with an aesthetic that sets the player apart from others. The game play is characterized by surprising solutions to the problem space.

For me, games are a structured form of play. As an example, a game may playfully represent an action with associated knowledge, such as becoming a railroad tycoon, driving a high performance racecar, or even raising a family. Games always involve contingent decision-making, forcing the players to learn and interact with cultural knowledge simulated in the game.

Games currently take a significant investment of time and effort to collectively construct. These objects follow in a history of collective construction by groups and communities. Consider cartography and the creation of a map as an example of collective distributed knowledge imbued in an object. According to Hutchins (1996),

“A navigation chart represents the accumulation of more observations than any one person could make in a lifetime. It is an artifact that embodies generations of experience and measurement. No navigator has ever had, nor will one ever have, all the knowledge that is in the chart.”

A single individual can use a map to navigate an area with competence, if not expertise. Observing an individual learning to use a map, or even to construct one, is instructive for learning about comprehension and decision-making. Interestingly, games provide structure to play, just as maps and media appliances provide structure to data to create information. Objects such as maps and games are examples of collective knowledge, and are what Vygotsky termed a pivot.

The term pivot was initially conceptualized in describing children’s play, particularly as a toy. A toy is a representation used in aiding knowledge construction in early childhood development. This is the transition where children may move from recognitive play to symbolic and imaginative play, i.e. the child may play with a phone the way it is supposed to be used to show they can use it (recognitive), and in symbolic or imaginative play, they may pretend a banana is the phone.

This is an important step since representation and abstraction are essential in learning language, especially print and alphabetical systems for reading and other discourse. In this sense, play provides a transitional stage in this direction whenever an object (for example a stick) becomes a pivot for severing the meaning of horse from a real horse. The child cannot yet detach thought from object (Vygotsky, 1976, p. 97). For Vygotsky, play represented a transition in comprehension and problem solving, where the child moved from external processing (imagination in action) to internal processing (imagination as play without action).

In my own work, I have studied the play of school children and adults as learning activities. This research has informed my work in classroom instruction and game design. Learning activities can be structured as a game, extending the opportunity to learn content and extending the context of the game into other aspects of the learner’s life. This provides performance data, allows for self-improvement with feedback, and supports data collection that can be assessed, measured, and evaluated for policy.

My research and publications have been informed by my work as a tenured teacher and software developer. A key feature of my work is the importance of designing for learning transfer and construct validity. When I design a learning environment, I do so with research in mind. Action research allows for reflection and analysis of what I created, what the learners experienced, and an opportunity to build theory. What is unique about what I do is the systems approach and the way I reverse engineer play as a deep and effective learning tool into transformative learning, where pleasurable activities can be counted as learning.

I have published using a wide variety of methodologies. One of them, cognitive ethnography, is typically associated with distributed cognition; it examines how communities contain varying levels of competence and expertise, and how they may imbue that knowledge in objects. I have used it specifically for game and play analysis (Dubbels 2008, 2011). This involves observation and analysis of space or context: specifically conceptual space, physical space, and social space. The cognitive ethnographer transforms observational data and interpretation of space into meaningful representations so that cognitive properties of the system become visible (Hutchins, 2010; 1995). Cognitive ethnography seeks to understand cognitive process and context by examining them together, thus eliminating the false dichotomy between psychology and anthropology. This can be very effective for building theories of learning while being accessible to educators.

My current interest is in using cognitive ethnographic methodology alongside traditional forms of research. This serves as an opportunity to move between inductive and deductive inquiry and observation to build a nomological network (Cronbach & Meehl, 1955), using measures and quantified observations with the Multiple Trait and Multiple Method Matrix Analysis (Campbell & Fiske, 1959) for construct validity (Cook & Campbell, 1979; Campbell & Stanley, 1966), especially in relation to comprehension and problem solving based upon the Event Indexing Model (Zwaan & Radvansky, 1998).
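As a rough illustration of the multitrait-multimethod logic (Campbell & Fiske, 1959) mentioned above, the sketch below correlates two traits, each measured with two methods, and separates same-trait correlations from different-trait correlations. The trait names, method names, and all scores are hypothetical placeholders invented for the example; they are not data from any study described here.

```python
from itertools import combinations
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation between two equal-length lists of scores."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


# Hypothetical scores for six learners, keyed by (trait, method).
scores = {
    ("comprehension", "test"): [2, 4, 5, 7, 8, 9],
    ("comprehension", "observation"): [3, 4, 6, 6, 9, 9],
    ("problem_solving", "test"): [7, 3, 8, 2, 6, 5],
    ("problem_solving", "observation"): [6, 4, 9, 3, 5, 5],
}

convergent = {}    # same trait measured with different methods
discriminant = {}  # different traits (same or different method)
for a, b in combinations(scores, 2):
    r = pearson(scores[a], scores[b])
    if a[0] == b[0]:
        convergent[(a, b)] = r
    else:
        discriminant[(a, b)] = r

for label, block in (("convergent", convergent), ("discriminant", discriminant)):
    for (a, b), r in block.items():
        print(f"{label:12s} {a} vs {b}: r = {r:+.2f}")
```

In Campbell and Fiske's terms, evidence for construct validity is stronger when the convergent correlations are clearly larger than the discriminant ones, which is the pattern these made-up numbers were chosen to show.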

We distribute knowledge because it is impossible for a single human being, or even a group to have mastery of all knowledge and all skills (Levy, 1997). For this reason I study access and quality of collective group relations and objects and the resulting comprehension and problem solving. The use of these objects and relations can scaffold learners and inform our understanding of how perceptual knowledge is internalized and transformed into conceptual knowledge through learning and experience.

Specialties

Educational research in cognitive psychology, social learning, identity, curriculum and instruction, game design, theories of play and learning, assessment, instructional design, and technology innovation.

Additionally:

The convergence of media technologies now allows for collection, display, creation, and broadcast of information as narrative, image, and data. This convergence of function makes two ideas important in the study of learning:

- The ability to create media communication through narrative, image, and data analysis and information graphics is becoming more accessible to non-experts through media appliances such as phones, tablets, game consoles and personal computers.

- These media appliances have taken very complex behaviors such as film production, which in the past required teams of people with special skill and knowledge, and have imbued these skills and knowledge in hand-held devices that are easy to use, and are available to the general population.

- This accessibility allows novices to learn complex media production, analysis, and broadcast, and allows for the study of these devices as objects that have been imbued with knowledge and skill, as externalized cognition. Through the use of these devices, the general population may learn complex skills and knowledge that may have required years of specialized training in the past. The interaction of individuals learning to use these appliances and devices can be studied as a progression of internalizing knowledge and skill imbued in objects.

- The convergence of media technologies into small, single (even handheld) devices emphasizes that technology for producing media may change, but the narrative has remained relatively consistent.

- This consistency of media as narrative, imagery, and data analysis emphasizes the importance of the continued study of narrative comprehension and problem solving through the use of these media appliances.

I am concerned that Gamefication obfuscates the real issue in learning:

Transfer.

Is there evidence that game-based learning leads to far transfer?

Without learning transfer, it doesn’t matter how a person learned–whether from a piece of software, watching an expert, rote memorization, or the back of a cereal box. What is important is that learning occurred, and how we know that learning occurred.

This leads to issues of assessment and evaluation.

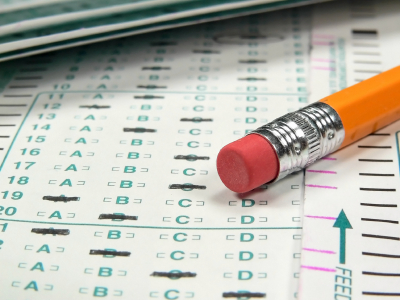

Transfer and Games: How do we assess this?

The typical response from gameficators is that assessment does not measure the complex learning from games. I have been there and said that. But I have learned that this response is overly dismissive of assessment and utterly simplistic. As I investigated assessment and psychometrics, I learned how simplistic my statements were. Games themselves are assessment tools, and I learned that by learning about assessment.

This Gameficator–(yes I am now going to 3rd person)–had to seek knowledge beyond his ludic and narratological powers. He had to learn the mysteries and great lore of psychometrics, instructional design, and educational psychology. It was through this journey into the dark arts that he has been able to overcome some of the traps of Captain Obvious, and his insidious powers to sidetrack and obfuscate through jargonification, and worse–like taking credit for previously documented phenomena by imposing a new name. . . kind of like my renaming Canada to the now improved, muchmorebetter name: Candyland.

In reality, I had to learn the language and content domain of learning to be an effective instructional designer, just as children learn the language and content domains of science, literature, math, and civics.

What I have learned through my journey is that if a learner cannot explain a concept, this inability to explain and demonstrate may be an indicator that the learning from the game experience is not crystallized–that the learner does not have a conceptual understanding that can be explained or expressed by that learner.

Do games deliver this?

Does gamefied learning deliver this?

Gameficators need to address this if this is to be more than a captivating trend.

I am fearful that the good that comes from this trend will not matter if we are only creating jargonification (even if it is more accessible). It is not enough to redescribe an established instructional design technique if we cannot demonstrate its effectiveness. We should be asking how learning in game-like contexts enhances learning and how to measure that.

Measurement and testing are important because well-designed tests and measures can deliver an assessment of crystallized knowledge–does the learner have a chunked conceptual understanding of a concept, such as “resistance”, “average”, “setting”?

Sure, students may have a gist understanding of a concept. The teacher may even see this qualitatively, but the student cannot express it in a test. So is that comprehension? I think not. The point of the test is an un-scaffolded demonstration of comprehension.

Maybe the game player has demonstrated the concept of “resistance” in a game by choosing a boat with less resistance to go faster in the game space– but this is not evidence that they understand the term conceptually. This example from a game may be an important aspect of perceptual learning, that may lead to the eventual ability to explain (conceptual learning); but it is not evidence of comprehension of “resistance” as a concept.

So when we look at game based learning, we should be asking what it does best, not whether it is better. Games can become a type of assessment where contextual knowledge is demonstrated. But we need to go beyond perceptual knowledge–reacting correctly in context and across contexts–to conceptual knowledge: the ability to explain and demonstrate a concept.

My feeling is that one should be skeptical of Gameficators who pontificate without pointing to the traditions in educational research. If Gamefication advocates want to influence education and assessment, they should attempt to learn the history, and provide evidential differences from the established terms they seek to replace.

A darker shadow is cast

Another concern I have is that perhaps not all gameficators are created equal. Maybe some gameficators are not really advocating for the game elements that are fundamental aspects of games? Maybe there are people with no ludic-narratological powers donning the cape of gamefication! I must ask, is this leaderboard what makes for “gameness”? Or does a completion task-bar make for a gamey experience?

Does this leaderboard for shoe preference tell us anything but shoe preference? Where is the game?

Are there some hidden game mechanics that I am failing to apprehend?

Is this just villainy?

Here are a couple of ideas that echo my own concerns:

It seems to me, that:

- some of the elements of gamefication rely heavily on aspects of games that are not new, just rebranded.

- some of the elements of gamefication aren’t really “gamey”.

What I like about games is that they can provide multimodal experiences, and provide context and prior knowledge without the interference of years of practice. This is new. Games accelerate learning by reducing some of the gatekeeping issues. Now a person without the strength, dexterity, and madness can share in some of the experience of riding a skateboard off a ramp. The benefit of games may be in the increased richness of a virtual interactive experience, providing immediate task competencies without the time required to become competent. Games provide a protocol, expedite learning, and scaffold players toward a fidelity of conceptual experience.

For example, in RIDE, the player is immediately able to do tricks that require many years of practice because the game exaggerates the fidelity of experience. This cuts the time it might take to develop conceptual knowledge by reducing the necessity of coordination, balance, dexterity and insanity to ride a skateboard up a ramp, do a trick in the air, and then land unscathed.

Gameficators might embrace this notion.

Games can enhance learning in a classroom, and enhance what is already called experiential learning.

My point:

words, terms, concepts, and domain praxis matter.

Additionally, we seem to be missing the BIG IDEA:

- that the way we structure problems is likely predictive of a successful solution.

Herb Simon expressed the idea in his book The Sciences of the Artificial this way:

“solving a problem simply means representing it so as to make the solution transparent.” (1981: p. 153)

Games can help structure problems.

But do they help learners understand how to structure a problem? Do they deliver conceptualized knowledge? Common vocabulary indicative of a content domain?

The issue is really about What Games are Good at Doing, not whether they are better than some other traditional form of instruction.

Do they help us learn how to learn? Do they lead to crystallized conceptual knowledge that is found in the use of common vocabulary? In physics class, when we mention “resistance”, students should offer more than

“Resistance is futile”

or, a correct but non-applicable answer such as “an unwillingness to comply”.

The key is that the student can express the expected knowledge of the content domain in relation to the word. This is often what we test for. So how do games lead to this outcome?

I am not here to give gameficators a hard time–I have a gamefication badge (self-created)–but I would like to know that when my learning is gamefied, the gameficator had some knowledge from the last century of instructional design and learning research, just as I want my physics student to know that resistance is an operationalized concept in science, not a popular cultural saying from the BORG.

The concerns expressed here are applicable in most learning contexts. But if we are going to advocate the use of games, then perhaps we should look at how good games are effective, as well as how good lessons are structured. Perhaps more importantly,

- we may need to examine our prevalent misunderstandings about learning assessment;

- perhaps explore the big ideas and lessons that come from years of educational psychology research, rather than just renaming and creating a new platform without realizing or acknowledging that current ideas in gamefication stand on the shoulders of giants from a century of educational research.

Jargonification is a big concern. So let's make our words matter and also look back as we look forward. Games are potentially powerful tools for learning, but not all may be effective in every purpose or context. What does the research say?

I am hopeful gamefication delivers a closer look at how game-like instructional design may enhance successful learning.

I don’t think anyone would disagree — fostering creativity should be a goal of classroom learning.

However, the terms creativity and innovation are often misused. When used they typically imply that REAL learning cannot be measured. Fortunately, we know A LOT about learning and how it happens now. It is measurable and we can design learning environments that promote it. It is the same with creativity as with intelligence–we can promote growth in creativity and intelligence through creative approaches to pedagogy and assessment. Data-driven instruction does not kill creativity; it should promote it.

One of the ways we might look at creativity and innovation is through the much maligned tradition of intelligence testing, as described on Wikipedia:

Fluid intelligence or fluid reasoning is the capacity to think logically and solve problems in novel situations, independent of acquired knowledge. It is the ability to analyze novel problems, identify patterns and relationships that underpin these problems and the extrapolation of these using logic. It is necessary for all logical problem solving, especially scientific, mathematical and technical problem solving. Fluid reasoning includes inductive reasoning and deductive reasoning, and is predictive of creativity and innovation.

Crystallized intelligence is indicated by a person’s depth and breadth of general knowledge, vocabulary, and the ability to reason using words and numbers. It is the product of educational and cultural experience in interaction with fluid intelligence and also predicts creativity and innovation.

The Myth of Opposites

Creativity and intelligence are not opposites. It takes both for innovation.

What we often lack are creative ways of measuring learning growth in assessments. When we choose to measure growth in summative evaluations and worksheets over and over, we nurture boredom and kill creativity.

To foster creativity, we need to adopt and implement pedagogy and curriculum that promote creative problem solving and also provide criteria that can measure creative problem solving.

What is needed are ways to help students learn content in creative ways through the use of creative assessments.

We often confuse the idea of learning creatively with trial and error and play, free of any kind of assessment, as if the Mona Lisa was somehow created through just free play and doodling, and as if assessment somehow kills creativity. Assessments provide learning goals.

Without learning criteria, students are left to make sense of the problem put before them with questions like “what do I do now?” (ad infinitum).

The role of the educator is to design problems so that the solution becomes transparent. This is done by providing information about process, outcome, and quality criteria; assessment is how the work is to be judged. For example, “for your next assignment, I want a boat that is beautiful and that is really fast. Here are some examples of boats that are really fast. Look at the hull, the materials they are made with, etc. and design me a boat that goes very fast and tell me why it goes fast. Tell me why it is beautiful.” Now use the terms from the criteria. What is beautiful? Are you going to define it? How about fast? Fast compared to what? These open-ended, interest-driven, free play assignments might be motivating, but without defined criteria they lead to quick frustration and lots of “what do I do now?”

But play and self-interest are not the problem here. The problem is the way we are approaching assessment.

Play is described as a range of voluntary, intrinsically motivated activities normally associated with recreational pleasure and enjoyment. Yet pleasure and enjoyment still come from judgements about one’s work, just as in assessment, whether finger painting or creating a differential equation. The key feature here is that play seems to involve self-evaluation and discovery of key concepts and patterns. Assessments can be constructed to scaffold and extend this, and this same process can be structured in classrooms through assessment criteria.

Every kind of creative play activity has evaluation and self-judgement: the individual is making judgements about pleasure, and often why it is pleasurable. This is often because they want to replicate this pleasure in the future, and oddly enough, learning is pleasurable. So when we teach a pleasurable activity, the learning may be pleasurable. This means chunking the learning and concepts into larger meaning units such as complex terms and concepts, which represent ideas, patterns, objects, and qualities. Thus, crystallized intelligence can be constructed through play as long as the play experience is linked and connected to help the learner to define and comprehend the terms (assessment criteria). So when the learner talks about their boat, perhaps they should be asked to sketch it first, and then use specific terms to explain their design:

Bow is the frontmost part of the hull

Stern is the rear-most part of the hull

Port is the left side of the boat when facing the Bow

Starboard is the right side of the boat when facing the Bow

Waterline is an imaginary line circumscribing the hull that matches the surface of the water when the hull is not moving.

Midships is the midpoint of the LWL (see below). It is half-way from the forwardmost point on the waterline to the rear-most point on the waterline.

Baseline is an imaginary reference line from which vertical distances are measured. It is usually located at the bottom of the hull.

Along with the learning activity and targeted learning criteria and content, the student should be asked a guiding question to help structure their description.

So, how do these parts affect the performance of the whole?

Additionally, the learner should be adopting the language (criteria) from the rubric to build comprehension, drawing on perception, experience, similarities, and contrasts to understand Bow and Stern, or even Beauty.

Experiential Learning for Fluidity and Crystallization

What the tradition of intelligence offers is an insight as to how an educator might support students. What we know is that intelligence is not innate. It can change through learning opportunities. The goal of the teacher should be to provide experiential learning that extends fluid intelligence through developing problem solving, and to link this process to crystallized concepts in vocabulary terms that encapsulate complex processes, ideas, and descriptions.

The real technology in a 21st Century Classroom is in the presentation and collection of information. It is the art of designing assessment for data-driven decision making. The role of the teacher should be in grounding crystallized academic concepts in experiential learning, with assessments that provide structure for creative problem solving. The teacher creates assessments where the learning is the assessment. The learner is scaffolded through the activity with the guidance of assessment criteria.

A rubric, which provides criteria for quality and excellence, can scaffold creativity, innovation, and content learning simultaneously. A well-conceived assessment guides students to understand descriptions of quality and helps students to understand crystallized concepts.

An example of a criteria-driven assessment looks like this:

| | Purpose & Plan | Isometric Sketch | Vocabulary | Explanation |
| --- | --- | --- | --- | --- |
| Level up | Has identified event and hull design with reasoning for appropriateness. | Has drawn a sketch where length, width, and height are represented by lines 120 degrees apart, with all measurements in the same scale. | Understanding is clear from the use of five key terms from the word wall to describe how and why the boat hull design will be successful for the chosen event. | Clear connection between the hull design, event, sketch, and important terms from word wall and next steps for building a prototype and testing. |
| Approaching | Has chosen a hull that is appropriate for event but cannot connect the two. | Has drawn a sketch where length, width, and height are represented. | Uses five key terms but struggles to demonstrate understanding of the terms in usage. | Describes design elements, but cannot make the connection of how they work together. |
| Do it again | Has chosen a hull design but it may not be appropriate for the event. | Has drawn a sketch but it does not have length, width, and height represented. | Does not use five terms from word wall. | Struggles to make a clear connection between conceptual design stage elements. |

What is important about this rubric is that it guides the learner in understanding quality and assessment. It also familiarizes the learner with key crystallized concepts as part of the assessment descriptions. In order to be successful in this playful, experiential activity (boat building), the learner must learn to comprehend and demonstrate knowledge of the vocabulary scattered throughout the rubric, such as isometric and reasoning. This connection to complex terminology grounded in experience is what builds knowledge and competence. When an educator can coach a student in connecting their experiential learning with the assessment criteria, they construct crystallized intelligence through grounding the concept in experiential learning, and potentially expand fluid intelligence through awareness of new patterns in form and structure.
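To make the idea of criteria-driven assessment concrete, the rubric can be sketched as a small data structure that records a level for each criterion and reports which criteria need another attempt. This is only an illustrative sketch: the level and criterion names come from the rubric above, but the point values (3, 2, 1), the `summarize` function, and the example ratings are assumptions added here, not part of the original rubric.

```python
# Level and criterion names are taken from the rubric; the point values
# are an assumption made for illustration only.
LEVEL_POINTS = {"Level up": 3, "Approaching": 2, "Do it again": 1}
CRITERIA = ["Purpose & Plan", "Isometric Sketch", "Vocabulary", "Explanation"]


def summarize(ratings):
    """Return (total points, criteria rated 'Do it again') for one student."""
    missing = [c for c in CRITERIA if c not in ratings]
    if missing:
        raise ValueError("No rating for: " + ", ".join(missing))
    total = sum(LEVEL_POINTS[ratings[c]] for c in CRITERIA)
    redo = [c for c in CRITERIA if ratings[c] == "Do it again"]
    return total, redo


# Invented ratings for an imaginary student (not real data).
example = {
    "Purpose & Plan": "Level up",
    "Isometric Sketch": "Approaching",
    "Vocabulary": "Do it again",
    "Explanation": "Approaching",
}

total, redo = summarize(example)
print(total, redo)
```

The list of criteria to redo, rather than the total, is what gives the learner a next step, which is the sense in which the assessment itself guides the learning.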

Play is Learning, Learning is Measurable

Just because someone plays or explores does not mean this learning is immeasurable. The truth is, research on creative breakthroughs demonstrates that authors of great innovation learned through years of dedicated practice and were often judged, assessed, and evaluated. This feedback from their teachers led them to new understanding and new heights. Great innovators often developed crystallized concepts that resulted from experience in developing fluid intelligence. This can come from copying the genius of others by replicating their breakthroughs; it comes from repetition and making basic skills automatic, so that they could explore the larger patterns resulting from their actions. It was the result of repetition and exploration, where they could reason, experiment, and experience without thinking about the mechanics of their actions. This meant learning the content and skills from the knowledge domain and developing some level of automaticity. What sets an innovator apart, it seems, is tenacity, being playful in their work, and working hard at their play.

According to Thomas Edison:

During all those years of experimentation and research, I never once made a discovery. All my work was deductive, and the results I achieved were those of invention, pure and simple. I would construct a theory and work on its lines until I found it was untenable. Then it would be discarded at once and another theory evolved. This was the only possible way for me to work out the problem. … I speak without exaggeration when I say that I have constructed 3,000 different theories in connection with the electric light, each one of them reasonable and apparently likely to be true. Yet only in two cases did my experiments prove the truth of my theory. My chief difficulty was in constructing the carbon filament. . . . Every quarter of the globe was ransacked by my agents, and all sorts of the queerest materials used, until finally the shred of bamboo, now utilized by us, was settled upon.

On his years of research in developing the electric light bulb, as quoted in “Talks with Edison” by George Parsons Lathrop in Harper’s Magazine, Vol. 80 (February 1890), p. 425

So when we encourage kids to be creative, we must also understand the importance of all the content and practice necessary to break through creatively. Edison was taught how to be methodical, critical, and observant. He understood the known patterns and made variations. It is important to know the known forms to know the importance of breaking forms. This may involve copying someone else’s design or ideas. Thomas Edison also speaks to this when he said:

Everyone steals in commerce and industry. I have stolen a lot myself. But at least I know how to steal.

Edison stole ideas from others (just as Watson and Crick were accused of doing). The point Edison seems to be making here is that he knew how to steal, meaning he saw how the parts fit together. He may have taken ideas from a variety of places, but he had the knowledge, skill, and vision to put them together. This synthesis of ideas took awareness of the problem, the outcome, and how things might work. It took lots and lots of experience and practice.

To attain this level of knowledge and experience, perhaps stealing ideas, or copying and imitation, is not a bad idea for classroom learning? However, copying someone else in school is viewed as cheating rather than as a starting point. Perhaps instead, we can take the criteria of examples and design classroom problems in ways that allow discovery and the replication of prior findings (the basis of scientific laws). It is often said that imitation is the sincerest form of flattery. Imitation is also one of the ways we learn. In the tradition of play research, mimesis is imitation; Aristotle held that it was “simulated representation”.

The Role of Play and Games

In closing, my hope is that we not use the terms “creativity” and “innovation” as suitcase words to diminish such things as minimum standards. We need minimum standards.

But when we talk about teaching for creativity and innovation, where we need to start is the way that we gather data for assessment. Often assessments are unimaginative in themselves. They are applied in ways that distract from learning, because they have become the learning. One of the worst outcomes of this practice is that students believe that they are knowledgeable after passing a minimum standards test. This is the soft bigotry of low expectations. Assessment should be adaptive, criteria driven, and modeled as a continuous improvement cycle.

This does not mean that we must drill and kill kids in grinding, mindless repetition. Kids will grind towards a larger goal where they are offered feedback on their progress. They do it in games.

Games are structured forms of play. They are criteria driven, and by their very nature, games assess, measure, and evaluate. But they are only as good as their assessment criteria.

These concepts should be embedded in creative active inquiry that will allow the student to embody their learning and memory. However, many of the creative, inquiry-based lessons I have observed tend to ignore the focus of academic language, the crystallized concepts, such as “what is fast?”, “what is beauty?”, “what is balance?”, or “what is conflict?” The focus seems to be on interacting with content rather than building and chunking the concepts with experience. When Plato describes the world of forms and wants us to understand the essence of the chair, i.e., “what is chairness?”, we may have to look at a lot of chairs to understand chairness. But this is how we build conceptual knowledge, and it should be considered when constructing curriculum and assessment. A guiding curricular question should be:

How does the experience inform the concepts in the lesson?

Data-driven instruction can be used in very creative lessons, just as it is used in the very unimaginative drill and kill approach. Teachers and assessment coordinators need to take the leap and learn to use data collection in creative ways, in constructive assignments that promote experiential learning with crystallized academic concepts.

If you have kids make a diorama of a story, have them use the concepts that are part of the standards and testing: plot, character, theme, setting, etc. Make them demonstrate and explain. If you want kids to learn the physics, have them make a boat and connect the terms through discovery. Use their inductive learning and guide them to conceptual understanding. This can be done through the use of informative assessments, such as rubrics and scales for assessment. Evaluation and creativity are not contradictory or mutually exclusive. These seeming opposites are complementary, and can be achieved through embedding the crystallized, higher order concepts into meaningful work.

This monograph describes cognitive ethnography as a method of choice for game studies, multimedia learning, professional development, leisure studies, and activities where context is important. Cognitive ethnography is efficacious for these activities as it assumes that human cognition adapts to its natural surroundings (Hutchins, 2010; 1995), with emphasis on analysis of activities as they happen in context; how they are represented; and how they are distributed and experienced in space. Along with this, the methodology is described for increasing construct validity (Cook and Campbell, 1979; Campbell & Stanley, 1966) and the creation of a nomological network (Cronbach & Meehl, 1955). This description of the methodology is contextualized with a study examining the literate practices of reluctant middle school readers playing video games (Dubbels, 2008). The study utilizes variables from empirical laboratory research on discourse processing (Zwaan, Langston, & Graesser, 1996) to analyze the narrative discourse of a video game as a socio-cognitive practice (Gee, 2007; Gee, Hull, & Lankshear, 1996).

Keywords:

Cognitive Ethnography, Methodology, Design, Game Studies, Validity, Comprehension, Discourse Processing, Reading, Literacy, Socio-Cognitive.

INTRODUCTION

As a methodological approach, cognitive ethnography assumes that cognition is distributed through rules, roles, language, relationships and coordinated activities, and can be embodied in artifacts and objects (Dubbels, 2008). For this reason, cognitive ethnography is an effective way to study activity systems like games, models, and simulations, whether mediated digitally or not.

BACKGROUND

In its traditional form, ethnography often involves the researcher living in the community of study, learning the language, and doing what members of the community do, in order to learn to see the world as it is seen by the natives in their cultural context (Fetterman, 1998).

Cognitive ethnography follows the same protocol, but its purpose is to understand cognitive process and context by examining them together, thus eliminating the false dichotomy between psychology and anthropology.

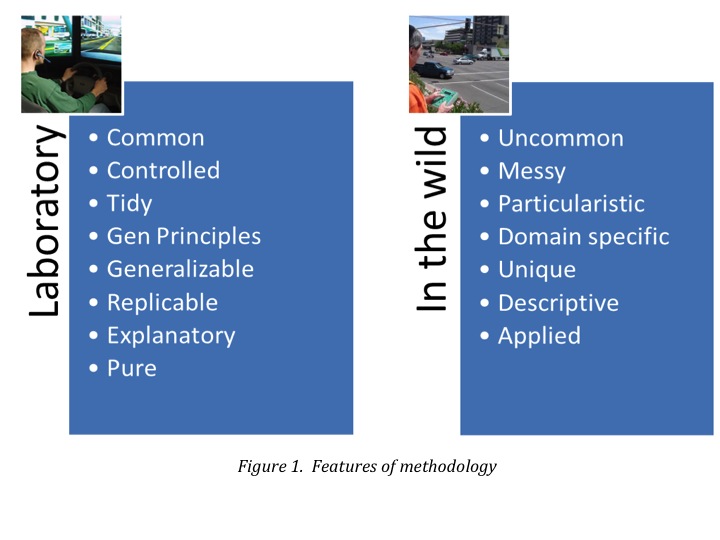

Observational techniques such as ethnography and cognitive ethnography attempt to describe and look at relations and interaction situated in the spaces where they are native. There are a number of advantages to both laboratory observation and in the wild as presented in Figure 1.

NOMOLOGICAL NETWORK

As mentioned, cognitive ethnography can be used as an attempt to provide evidence of construct validity. This approach, developed by Cronbach & Meehl (1955), posits that a researcher should provide a theoretical framework for what is being measured, an empirical framework for how it is to be measured, and specification of the linkage between these two frameworks. The idea is to link the conceptual/theoretical with the observable and examine the extent to which a construct, such as comprehension, behaves as it was expected to within a set of related constructs. One should attempt to demonstrate convergent validity by showing that measures that are theoretically supposed to be highly interrelated are, in practice, highly interrelated, and discriminant validity by showing that measures that shouldn’t be related to each other in fact are not.

This approach, the nomological network, is intended to increase construct validity and, as in the example that follows, external validity: the generalization from one study context, such as the laboratory, to other contexts, i.e., other people, places, and times. When we claim construct validity, we are essentially claiming that our observed pattern (how things operate in reality) corresponds with our theoretical pattern (how we think the world works). To do this, it is important to move outside of laboratory settings to observe the complex ways in which individuals and groups adapt to naturally occurring, culturally constituted activities. Theory building is extended by approaching research questions in different ways: contexts are first observed in the wild, then refined in the laboratory, and then used as a lens in field observation.
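The convergent and discriminant pattern described above can be sketched with a small correlation check. This is a minimal illustration only: the measure names (`game_recall`, `text_recall`, `unrelated_measure`) and all of the scores are invented for the sketch and do not come from the study, and five data points are far too few for any real claim about validity.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation coefficient for two equal-length lists of numbers."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (spread_x * spread_y)


# Invented scores for five imaginary participants (not real data).
scores = {
    "game_recall": [2, 4, 5, 7, 9],        # hypothetical comprehension measure
    "text_recall": [1, 3, 6, 6, 10],       # hypothetical related measure
    "unrelated_measure": [8, 6, 9, 6, 8],  # hypothetical unrelated measure
}

# Convergent evidence: theoretically related measures should correlate highly.
convergent = pearson(scores["game_recall"], scores["text_recall"])
# Discriminant evidence: theoretically unrelated measures should not.
discriminant = pearson(scores["game_recall"], scores["unrelated_measure"])

print(round(convergent, 2), round(discriminant, 2))
```

In a real nomological network the expected pattern of high and low correlations is specified from theory before the data are collected; the code only checks whether the observed pattern matches it.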

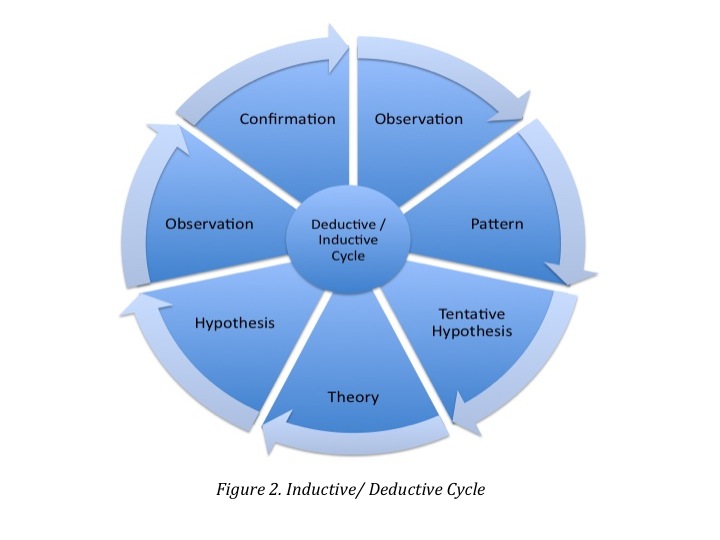

The pattern fits a deductive/inductive framework:

- Deductive: theory, hypothesis, observation, and confirmation

- Inductive: observation, pattern, tentative hypothesis, and theory

These two approaches to research have a different purpose and approach. Most social research involves both inductive and deductive reasoning processes at some time in the project. It may be more reasonable to look at deductive/inductive approaches as a mixed, circular approach. Since cognition can be seen as embodied in cultural artifacts and behavior, cognitive ethnography is an apt methodology for the study of learning with games, in virtual worlds, and the study of activity systems, whether they are mediated digitally or not. By using the deductive/inductive approach, and expanding observation, one can contrast and challenge theoretical arguments by testing in expanded context.

Cognitive ethnography emphasizes inductive field observation, but also uses theory in a deductive process to analyze behavior. This approach is useful to increase external validity, operationalize terms, and develop content validity through expanding a study across new designs, across different time frames, in different programs, from different observational contexts, and with different groups (Cook and Campbell, 1979; Campbell & Stanley, 1966).

More specifically, cognitive ethnography emphasizes observation and key feature analysis of space, objects, concepts, actions, tools, rules, roles, and language. Study of these features can help the researcher determine the organization, transfer, and representation of information (Hutchins, 2010; 1995).

Ontology/Purpose

As stated, cognitive ethnography assumes that human cognition adapts to its natural surroundings. Therefore, the role of cognitive ethnographer is to transform observational data and interpretation into meaningful representations so that cognitive properties of the system become visible (Hutchins, 2010; 1995).

According to Hutchins (2010), study of the space where an activity takes place is a primary feature of observation in cognitive ethnography. He lists three kinds of important spaces for consideration (see Figure 2).

APPLICABILITY

Just as a book is organized to present information, games also structure narratives, and are themselves cultural artifacts containing representation of tools, rules, language, and context (Dubbels, 2008). This makes cognitive ethnography an apt methodology for the study of games, simulations, narrative, and human interaction in authentic context.

This emphasis on space is also indicative of current approaches to literacy (Leander, 2002; Leander & Sheehy, 2004); of critical science and the studied interaction between the internal world of the self and the structures found in the world, and how we communicate about them (Soja, 1996; Lefebvre, 1994); of the tradition of ecological views on cognitive psychological perspectives (Gibson, 1986); and, in the case of the example, of Discourse Processing (Zwaan, Langston, & Graesser, 1996). Because the ontology and purpose of the method align so closely with the variables identified in the Discourse Processing model (Zwaan, Langston, & Graesser, 1996), cognitive ethnography was applicable as a methodological approach to create a convergence of theory and tradition, predicated upon an approach that aligns in purpose with analysis and question.

EXAMPLE

As an example, Dubbels (2008) used cognitive ethnography to observe video game play at an afterschool video game club. The purpose of this observation was to explore video game play as a literate practice in an authentic context. The cognitive ethnography methodology was recruited to utilize peer reviewed empirical research from laboratory studies—utilizing narrative discourse processing to interpret the key variables—to extend construct validity and observe whether the laboratory outcomes appeared in authentic, native contexts.

This allowed the researcher to interpret observations of authentic video game play at an afterschool video game club through the lens of empirical laboratory work.

Guiding question

With its focus on space and social context, this example of cognitive ethnography explored a statement from O’Brien & Dubbels (2004, p. 2):

Reading is more unlike the reading students are doing outside of school than at any point in the recent history of secondary schools, and high stakes, print-based assessments are tapping skills and strategies that are increasingly unlike those that adolescents use from day to day.

These day-to-day skills and strategies were viewed theoretically as literate practice.

They led to the guiding question:

- Can games be described as a literate practice as has been described by theoreticians?

If so, this should be apparent through:

- Observing game play

- Understanding the game narrative and controls

- Analyzing interaction and behavior

Hypothesis

The guiding question, whether games could be viewed as a literate practice, was extended to create a hypothesis to test:

- Can the literate practice of gaming be used to facilitate greater success with printed text?

The hypothesis would be tested through examination of game play narratives and printed text narratives. As described in the Nomological Network section, this would be a deductive/inductive process. The variables from the Event Indexing Model could be used for identifying levels of discourse and the ability to create a mental representation after the inductive observation process.

The hypothesis was predicated upon the theory that familiarity with patterns in text, from symbolic representations such as words, sentences, and images to story grammars such as “once upon a time,” in a game might be used as a developmental analog to help struggling readers predict the structure and purpose of print narratives by helping them to expect certain events, characters, and settings, and so help the reader to become more efficient. In essence, they would have expectations that “once upon a time” leads to “happily ever after”, and other genre patterns attributable to transmedial narrative genre patterns.

The theory is that a reader may be capable of compensation, i.e., the use of genre patterns and predictive inference as higher-level processes in order to support lower-level processes (Stanovich, 2000). It was proposed that, to develop meaningful comprehension, the propositional and situation levels might be built upon for building a mental representation of printed narrative text with the game.

Context and Variables for Coding and Analysis

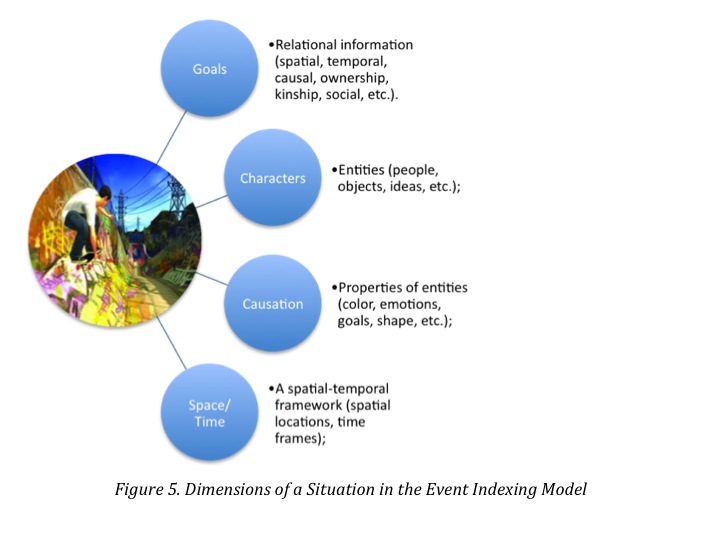

Literate activities were codified based upon a well-established model of discourse processing, the Event Indexing Model (Zwaan, Langston, & Graesser, 1996). The Event Indexing Model offered five levels of discourse processing: Surface Level, Propositional Level, Situation Level, Genre Level, and Author Communication.

These levels offer an opportunity to view comprehension as a transmedial trait across discourse. The Situation Level (Figure 5) is composed of two sub-levels of the variable. These are aspects of mental representation called the Dimensions of Mental Representation and are composed of: time, space, characters, causation, and goals. These variables of the discourse-processing model were used to code the transcripts from the game club audio/video games, and context in order to explore the familiarity the students had with patterns in discourse, and their ability to recognize and process them. In order to observe the literate activities of students in their chosen medium, we offered the afterschool game club to students who had been selected by school district professionals for reading remediation courses outside of the mainstream. The video game play and activity space were analyzed from direct observation and analysis of audio/video recordings and photos taken during the activity.
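As a rough illustration of what coding transcripts with these variables might look like, the sketch below tallies how often each of the five situation dimensions is assigned to transcript segments. It is a hypothetical sketch, not the coding procedure used in the study: the `tally` function, the segment format, and the example utterances and codes are all invented for illustration.

```python
# The five dimension names come from the text above; everything else
# in this sketch is an assumption made for illustration.
DIMENSIONS = ("time", "space", "characters", "causation", "goals")


def tally(coded_segments):
    """Count how often each dimension was assigned across coded segments."""
    counts = dict.fromkeys(DIMENSIONS, 0)
    for utterance, codes in coded_segments:
        for code in codes:
            if code not in counts:
                raise ValueError(f"Unknown code {code!r} on: {utterance}")
            counts[code] += 1
    return counts


# Invented utterances with hand-assigned codes (not data from the study).
segments = [
    ("Set the second bomb right before the first one goes off.", ["time", "causation"]),
    ("The door is past the big room on the left.", ["space"]),
    ("I need the upgrade so I can beat the boss.", ["goals", "causation", "characters"]),
]

counts = tally(segments)
print(counts)
```

A tally like this only summarizes the coding; the interpretive work of deciding which dimension an utterance reflects still belongs to the researcher.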

Conceptual Space Analysis

Walkthroughs of the game were used to look at decision making through navigation of the game.

A Walkthrough, according to Dubbels (in Beach, Anson, Breuch, & Swiss eds, 2009), is a document that describes how to proceed through a level or particular game challenge. Walkthroughs are created by the game developer or players and often include video, audio, text, and static images—offering strategies, maps through levels, the locations of objects, and important and subtle elements of the game.

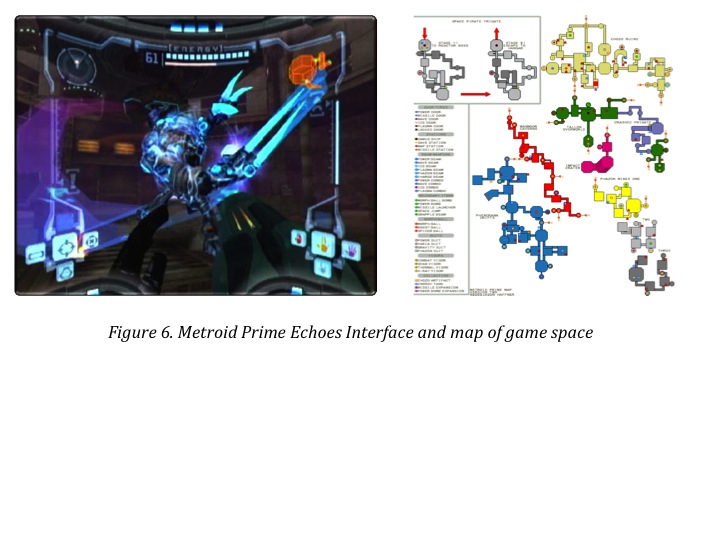

In order to have a thorough understanding of the possible goals, actions, and behaviors available in the game, a number of walkthroughs were analyzed along with the game controls and maps for optimal play (Figure 4).

Physical Space Analysis

To create the cognitive ethnography of the video game play, two video captures were used: one to record the screen activity, and one to record player interaction with the game and play space. Because the player of the game was often highly engaged with problem solving and reacting to the game environment, there was often little-to-no dialog or variation in expression and body language. However, play was often done in the company of others. This was informative, as the discussion, encouragement, and advice displayed the social and cultural knowledge of the strategies of game play. In addition, a still camera was made available for the students to take pictures for their club. This included digital pictures of the game screens and each other playing, or whatever they felt was interesting.

Social Space Analysis

The audio and video, and still images were used for analysis of the social space, as well as the physical space. However, another level of data collection involved showing the player the video recording of their play and action in the room were used for a “reflect aloud†(Ericsson & Simon, 1983) for them to describe their play and social interaction. The key feature was not only observing the play, but also identifying theories of relationships, cognition and social learning—“what were you thinking there?†was the main question asked. This dialogue served to explain the player’s reasoning and decisions without overt interpretation by the observer. This enhanced the description, and connected the naturalistic game play to the laboratory, and then back to behavior in the wild.

It was this exploration of theory that led to the study of struggling readers using video games as a method for observing levels of mental representation and recall in game play and reading. Using the cognitive ethnographic approach allowed for comparison of students observed playing video games with friends, the dialog and behaviors that constituted game play as a literacy (Gee, 2007; Gee, Hull, & Lankshear, 1996), and their formal academic reading behaviors. Because the boys were also observed in a formal laboratory setting, it was possible to make comparisons with their game play in the informal, or wild, autonomy-supporting space.

Examples of Analysis

An example of the game play observation comes from Dubbels (2008, p. 265):

Since Darius seemed to know what he was talking about, he went next, and as he played, the other boys watched and were excited with what Darius was able to do. Darius seemed happy to demonstrate what he knew. While I was recording, the boys described Darius’ play and shared ideas enthusiastically about how the game worked and looked forward to their chance to play. As Darius made a move where he showed how to do a double bomb jump, the boys watched intently. The way it was explained was that you lay a bomb, and right before that bomb explodes, set a second one, then set a third just before you reach the very top of the jump. You should fall and land said the easiest way “is to count out: 1, 2, 3, 4.”

And he laid the bombs on 1, 3, and 4. The boys were excited about this, as well as Darius’ willingness to show them. What was clear was that Darius had not only played the game before, and as I questioned him more later I found that he had read about it and applied what he had read. He had performed a knowledge act demonstrating comprehension.

The other boys were eager to try some of the things Darius had shown them, and Darius was happy to relinquish the controller. What happened from there was that Darius watched for a while and then walked over to the Xbox, and then to the bank of computers. I left the camera to record the boys playing Metroid Prime and I walked over to see what Darius was doing. He showed me a site on the Internet where he was reading about the game. He had gone to a fan site where another gamer had written a record of what each section of the game was like, what the challenges were, cool things to do, and cool things to find. I asked him if this was cheating; he said “maybe” and smiled. He said that it made the game more fun and that he could find more “cool stuff” and it helps him to understand how to win easier and what to look for.

This idea of secondary sources to better understand the game makes a lot of sense to me. It is a powerful strategy that informs comprehension as described previously in this chapter. The more prior knowledge a person has before reading or playing, the more likely they are to comprehend it fully. Secondary sources can help the player by supporting them in preconceiving the dimensions of Level 3 in the comprehension model, and with that knowledge, the player may have an understanding of what to expect, what to do, and where to focus attention for better success. Darius has clearly displayed evidence that he knows what it takes to be a competent comprehender. He had clearly done the work in looking for secondary sources and was motivated to read with a specific purpose: to know what games he wants to try and to be good at those games. His use of secondary sources showed that he was able to draw information from a variety of sources, synthesize them, and apply his conclusion with practice to see if it works.

One of the key features of the cognitive ethnography is the realization that even the smallest of human activities are loaded with interesting cognitive phenomena. To study these phenomena, one should choose an activity setting for observation, establish rapport, and record what is happening so that the action can be stopped for closer scrutiny. This can be done with photos, video, audio recording, and a notebook. The key steps are event segmentation, identification of structure in the events, and then interpretation.

As was presented in the passage from Dubbels (2008), the analysis described the social network surrounding the game play of one boy, the different spaces, and the behaviors of the boys around him. When a model is used as a framework for analysis, the link between game play and strategy for successfully navigating the video game can be considered an analog to how young people read print text.

One can then connect the cultural organization with the observed processes of meaning making. This allows patterns and coherence in the data to become visible through identification of logical relations and cultural schemata. In this study, it allowed for description of engaged learning as the students approached the video game: their social relations and how they managed the information related to success in the game, including reading the directions, taking direction from others, using secondary sources, and developing comprehension during discourse processing, compared to the laboratory setting.

In order to see if there was transfer, students were asked to work with the investigator in a one-on-one read-aloud in a laboratory setting. Each student was asked to read a short novel, Seed People, to the investigator, who looked for parallels and congruency between the narratives found in game play and the traditional print-based narratives found in the classroom.

What I noticed in talking to them about Seed People was that they would read without stopping. They would just roll right through the narrative until I would ask them to stop and tell me about what they thought was going on, with no thought of looking at the situations and events that framed each major scene, and then connecting these scenes as a coherent whole as is described earlier in the chapter as an act of effective comprehension.

In one case Stephen made interesting connections between what he saw with an older boy in the story and the struggles his brother was having in real life. I just wondered if he would have made that connection if I had not stopped at the close of that event to talk about it and make connections. This ability to chunk events and make connections, as situations change and the mental representation is updated, is important for transition points in the incremental building of a comprehensive model of a story or experience.

When working to teach reading with this information, it is important to connect to prior knowledge and build and compare the new information to prior situation models or prior experience. Consider a storyboard or a comic strip where each scene is defined and then the next event is framed. Readers need to learn to create these frames when comprehending text. Each event in a text should then be integrated and developed as an evolution of ideas presented as each scene builds with new information; the model is updated and expanded.

If the event that is currently being processed overlaps with the events in working memory on a particular dimension, then a link between those events is established and stored in long-term memory. Overlap is determined based on two events sharing an index (i.e., a time, place, protagonist, cause, or goal). (Goldman, Graesser, & van den Broek, 1999, p. 94)
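The linking rule in this passage can be illustrated with a minimal sketch. It is only an illustration under stated assumptions, not the model's published implementation: the names `Event`, `shared_indices`, and `index_event` are hypothetical, the event descriptions are invented for the example, and two events are treated as overlapping on a dimension only when both carry the same non-empty value for it.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

# The five dimensions named in the quoted passage.
DIMENSIONS = ("time", "place", "protagonist", "cause", "goal")


@dataclass(frozen=True)
class Event:
    """A hypothetical event record; unknown dimensions stay None."""
    label: str
    time: Optional[str] = None
    place: Optional[str] = None
    protagonist: Optional[str] = None
    cause: Optional[str] = None
    goal: Optional[str] = None


def shared_indices(a: Event, b: Event) -> List[str]:
    """Return the dimensions on which two events share an index."""
    return [
        dim for dim in DIMENSIONS
        if getattr(a, dim) is not None and getattr(a, dim) == getattr(b, dim)
    ]


def index_event(current: Event,
                working_memory: List[Event],
                long_term_links: List[Tuple[str, str, str]]) -> None:
    """Link the current event to each overlapping event in working memory
    and store every link in long-term memory (assumed here to be a list)."""
    for earlier in working_memory:
        for dim in shared_indices(current, earlier):
            long_term_links.append((earlier.label, current.label, dim))
    working_memory.append(current)


# Invented example events, loosely based on the game play described above.
working_memory: List[Event] = []
links: List[Tuple[str, str, str]] = []
index_event(Event("lay first bomb", place="shaft",
                  protagonist="player character", goal="reach ledge"),
            working_memory, links)
index_event(Event("lay second bomb", place="shaft",
                  protagonist="player character", goal="reach ledge"),
            working_memory, links)
index_event(Event("read fan site", place="computer bank",
                  protagonist="Darius"),
            working_memory, links)
```

In this sketch the two bomb events are linked on place, protagonist, and goal, while the fan-site event shares no index with either and so stays unlinked. A reader who does not segment the text into events has nothing to pass to `index_event`, which is one way to picture the difficulty described in the read-aloud sessions.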

In this instance with Stephen, there were many opportunities for analysis with the spaces described by Hutchins. The boy made connections to family outside of the novel, to his brother, to make the story meaningful and to chunk a large section of the book as an event he could relate to. There was also the description of the setting, where Stephen was not pausing or processing the narrative in his reading. The activity did not include any social learning or modeling from friends and contemporaries, but reflected the controlled, formal environment of school.

Thus, it was concluded that we must build our understanding in multiple spaces. The attributes of the situation model were made much more robust and much more easily accessible when prior knowledge was recruited and connected with the familiar.

Two types of prior knowledge support this in the Event Indexing Model:

• General world knowledge (pan-situational knowledge about concept types, e.g., scripts, schemas, categories, etc.), and

• Referent specific knowledge (pan-situational knowledge about specific entities).

These two categories represent experience in the world and literary elements used in defining genre and style, as described in the Event Indexing Model. The theory posits that if a reader has more experience with the world that can be tapped into, and also knowledge and experience about the structure of stories, he or she is more likely to have a deeper understanding of the passage. In the case of the game players, this prior knowledge was seen to be important for seeking secondary sources, as well as for copying the modeled behavior of successful players like Darius and segmenting action into manageable events. This was also evident when the students were asked to read aloud print text from the Seed People novel. The students, like Stephen, showed they had difficulty segmenting events, or situations, just as they had difficulty with game play.

Of the fourteen regular students in the club, only two were successful with the games. After further interview and analysis, the two successful gamers, who showed awareness of game story grammar and narrative patterns, were found to lack confidence in printed text. However, they were able to apply the narrative awareness strategies from games to print text from secondary sources in order to help them successfully play the games. Conversely, the twelve students who struggled had to learn the help-seeking strategies and narrative awareness.

CONCLUSION

For this study, cognitive ethnography was an appropriate methodology as it allowed for observation and analysis of the social and cultural context to inform the cognitive approach taken by the game players. It improved external validity from the laboratory study by applying the same construct to a new time, place, group, and methodology. The cognitive ethnography methodology presents an opportunity to move between inductive and deductive inquiry and observation to build a nomological network. It can extend laboratory findings into authentic, autonomy-supporting contexts and help explain the social and cultural behaviors that surround the activities, thus increasing generalizability. This opportunity to use hypothesis testing in an authentic setting can provide a more suitable methodology for usability and translation for other contexts like the classroom, professional development, product design, and leisure studies.

References

Campbell, D.T., & Stanley, J.C. (1966). Experimental and quasi-experimental designs for research. Skokie, IL: Rand McNally.

Cook, T.D., & Campbell, D.T. (1979). Quasi-experimentation: Design and analysis issues for field settings. Boston: Houghton Mifflin.

Cronbach, L., & Meehl, P. (1955). Construct validity in psychological tests. Psychological Bulletin, 52(4), 281-302.

Deci, E. L., & Ryan, R.M. (1985). Intrinsic motivation and self-determination in human behavior. New York: Plenum Press.

Dubbels, B.R. (2008). Video games, reading, and transmedial comprehension. In R. E. Ferdig (Ed.), Handbook of research on effective electronic gaming in education. Information Science Reference.

Dubbels, B.R. (2009). Analyzing purposes and engagement through think-aloud protocols in video game playing to promote literacy. Paper presented at the National Reading Conference, Orlando, FL.

Dubbels, B. (2009). Students’ blogging about their video game experience. In R. Beach, C. Anson, L. Breuch, & T. Swiss (Eds.), Engaging students in digital writing. Norwood, MA: Christopher Gordon.

Ericsson, K., & Simon, H. (1993). Protocol analysis: Verbal reports as data (2nd ed.). Cambridge, MA: MIT Press.

Gee, J. P. (2007). Good video games + good learning. New York: Peter Lang.

Gee, J., Hull, G., & Lankshear, C. (1996). The new work order: Behind the language of the new capitalism. Boulder, CO: Westview.

Gibson, J. J. (1986). The ecological approach to visual perception. Hillsdale, NJ: Erlbaum.

Hutchins, E. (1996). Cognition in the wild. Cambridge, MA: MIT Press.

Hutchins, E. (2010). Two types of cognition. Retrieved August 15, 2010, from http://hci.ucsd.edu/102b.

Leander, K. (2002). Silencing in classroom interaction: Producing and relating social spaces. Discourse Processes, 34(2), 193–235.

Leander, K., & Sheehy, M. (Eds.). (2004). Spatializing literacy research and practice. New York: Peter Lang.

Lefebvre, H. (1991). The production of space. Cambridge, MA: Blackwell.

O’Brien, D.G., & Dubbels, B. (2004). Reading-to-learn: From print to new digital media and new literacies. Prepared for National Central Regional Educational Laboratory. Learning Point Associates.

Soja, E. (1989). Postmodern geographies: The reassertion of space in critical social theory. London: Verso.

Soja, E. (1996). Thirdspace: Journeys to Los Angeles and Other Real-and-Imagined Places. Malden, MA: Blackwell.

Stanovich, K.E. (2000). Progress in understanding reading. New York: Guilford Press.

Zwaan, R.A., Langston, M.C., & Graesser, A.C. (1995). The construction of situation models in narrative comprehension: an event-indexing model. Psychological Science, 6, 292-297.

Zwaan, R.A., & Radvansky, G.A. (1998). Situation models in language comprehension and memory. Psychological Bulletin, 123, 162-185.


Developmental Changes in the Visual Span for Reading

MiYoung Kwon,a Gordon E. Legge,a and Brock R. Dubbelsb

a Department of Psychology, University of Minnesota, Elliott Hall, 75 East River Rd. Minneapolis, MN 55455 USA

b College of Education & Human Development, University of Minnesota, Burton Hall, 178 Pillsbury Dr., Minneapolis MN 55455 USA